Perceptron

The Perceptron is inspired by the information processing of a single neural cell (called a neuron). A neuron accepts input signals through its dendrites, which pass the electrical signal down to the cell body. The axon carries the signal out to synapses, which are the connections of one cell's axon to the dendrites of other cells. In a synapse, electrical activity is converted into molecular activity (neurotransmitter molecules crossing the synaptic cleft and binding to receptors). The molecular binding develops an electrical signal, which is passed on to the dendrites of the connected cells.

The information processing objective of the technique is to model a given function by modifying the internal weights of the input signals to produce an expected output signal. The system is trained using a supervised learning method, where the error between the system output and a known expected output is presented to the system and used to change its internal state. The state is maintained in a set of weights on the input signals. The weights are used to represent an abstraction of the mapping of the input vectors to the output signal for the examples to which the system was exposed during training.

The Perceptron is made up of a data structure (the weights) and separate procedures for training and applying that structure. The structure is really just a vector of weights (one for each expected input) and a bias term.

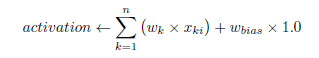

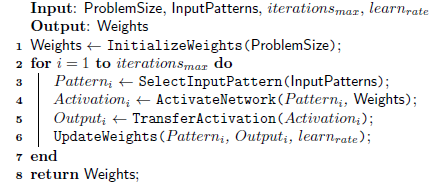

Training the Perceptron works as follows. A weight is initialized for each input, plus an additional weight for a constant bias input that is almost always set to 1.0. The activation of the network for a given input pattern is calculated as follows:

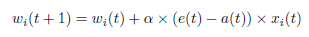

where n is the number of weights and inputs, x_ki is the k-th attribute of the i-th input pattern, and w_bias is the bias weight. The weights are updated as follows:

$$w_i(t+1) = w_i(t) + \alpha \times \big(e(t) - a(t)\big) \times x_i$$

where w_i is the i-th weight at times t and t + 1, α is the learning rate, e(t) and a(t) are the expected and actual output at time t, and x_i is the i-th input. This update is applied to each weight in turn, as well as to the bias weight with its constant input.
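The activation and the weight update described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names, the example numbers, and the thresholding of the activation at zero to obtain the actual output are all assumptions made for the sketch.

```python
def activation(weights, bias_weight, inputs, bias_input=1.0):
    """Weighted sum of the inputs plus the bias weight times its constant input."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias_weight * bias_input


def update_weights(weights, bias_weight, inputs, expected, actual,
                   learning_rate=0.1, bias_input=1.0):
    """Move every weight by learning_rate * (expected - actual) * its input."""
    error = expected - actual
    new_weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
    # The bias weight is updated like any other weight, using its constant input.
    new_bias_weight = bias_weight + learning_rate * error * bias_input
    return new_weights, new_bias_weight


# One illustrative step with made-up numbers (hypothetical, not from the text).
weights, bias_weight = [0.25, 0.5], 0.0
inputs, expected = [1.0, 0.0], 0.0

a = activation(weights, bias_weight, inputs)
actual = 1.0 if a >= 0 else 0.0  # assumed step transfer: threshold at zero
weights, bias_weight = update_weights(weights, bias_weight, inputs, expected, actual)
```

In this step the output is too high (1 instead of 0), so the weight on the active input and the bias weight both decrease, while the weight on the zero input is left unchanged.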

The Perceptron can be used to approximate arbitrary linear functions and can be used for problems of regression or classification. The Perceptron cannot learn a nonlinear mapping between input and output attributes. The XOR problem is a classic example of a problem that the Perceptron cannot learn.
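The XOR limitation can be illustrated with a small exhaustive search. The sketch below tries every combination of two weights and a bias weight on a coarse grid and counts how many reproduce a truth table through a step output. The grid range and step, the function names, and the use of OR as the linearly separable comparison are assumptions chosen for the illustration; a grid search is only a demonstration, not a proof.

```python
from itertools import product


def step_output(w1, w2, bias_weight, x1, x2):
    """Binary output of a two-input Perceptron: 1 if activation >= 0, else 0."""
    return 1 if w1 * x1 + w2 * x2 + bias_weight >= 0 else 0


def count_solutions(truth_table, grid):
    """Count the (w1, w2, bias) triples on the grid that match every table row."""
    count = 0
    for w1, w2, b in product(grid, repeat=3):
        if all(step_output(w1, w2, b, x1, x2) == y
               for (x1, x2), y in truth_table.items()):
            count += 1
    return count


OR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 1}
XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

# Hypothetical search grid: values from -2.0 to 2.0 in steps of 0.25.
GRID = [i / 4 for i in range(-8, 9)]

or_solutions = count_solutions(OR_TABLE, GRID)
xor_solutions = count_solutions(XOR_TABLE, GRID)
print(or_solutions > 0, xor_solutions)  # prints: True 0
```

Many weight settings reproduce OR, for example w1 = w2 = 1.0 with a bias weight of -0.5, but none reproduce XOR, because no single straight line separates its two output classes.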

The input and output values should be normalized such that each x is in [0, 1]. The learning rate α in [0, 1] controls how much each error changes the system; low learning rates such as 0.1 are common. Weights can be updated online (after exposure to each input pattern) or in batches (after a fixed number of patterns have been observed). Batch updates are expected to be more stable than online updates for some complex problems.
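As a rough sketch of these two heuristics, the code below rescales a column of values to [0, 1] and applies one batch update. The helper names are hypothetical. Summing the per-pattern changes before applying them is one possible reading of "batch" (averaging is another), and the output is assumed to be thresholded at zero.

```python
def min_max_normalize(column):
    """Rescale a list of numbers to [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]


def batch_update(weights, bias_weight, batch, learning_rate=0.1):
    """Accumulate the weight changes over a batch, then apply them once.

    `batch` is a list of (inputs, expected) pairs. Every pattern in the batch
    is evaluated against the same, not yet updated, weights.
    """
    weight_deltas = [0.0] * len(weights)
    bias_delta = 0.0
    for inputs, expected in batch:
        act = sum(w * x for w, x in zip(weights, inputs)) + bias_weight * 1.0
        actual = 1.0 if act >= 0 else 0.0  # assumed step transfer
        error = expected - actual
        for i, x in enumerate(inputs):
            weight_deltas[i] += learning_rate * error * x
        bias_delta += learning_rate * error * 1.0
    new_weights = [w + d for w, d in zip(weights, weight_deltas)]
    return new_weights, bias_weight + bias_delta


scaled = min_max_normalize([2.0, 4.0, 6.0])
batch = [([1.0, 0.0], 0.0), ([0.0, 1.0], 1.0)]
new_weights, new_bias_weight = batch_update([0.0, 0.0], 0.0, batch)
```

An online update is the special case where each batch holds a single pattern, so the weights change before the next pattern is evaluated.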

A bias weight is used with a constant input signal to provide stability to the learning process. A step transfer function is commonly used to convert the activation into a binary output value: 1 if the activation is ≥ 0, otherwise 0. It is good practice to present the input patterns to the system in a different random order on each pass through the training set. Initial weights are typically small random values, usually in [0, 0.5].
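Putting these heuristics together, a training loop might look like the sketch below: small random initial weights in [0, 0.5], a step transfer function, online updates, and a fresh shuffle of the patterns on every pass. The function names, the seed, the epoch limit, the early stop, and the OR training set are assumptions made for the example.

```python
import random


def step(activation_value):
    """Step transfer: 1 if the activation is >= 0, otherwise 0."""
    return 1 if activation_value >= 0 else 0


def predict(weights, bias_weight, inputs):
    """Apply the Perceptron: weighted sum plus bias (constant input 1.0), then step."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias_weight * 1.0)


def train(patterns, n_inputs, learning_rate=0.1, epochs=200, seed=1):
    """Online training with the patterns reshuffled on every pass."""
    rng = random.Random(seed)
    # Small random initial weights in [0, 0.5], one per input plus the bias.
    weights = [rng.uniform(0.0, 0.5) for _ in range(n_inputs)]
    bias_weight = rng.uniform(0.0, 0.5)
    order = list(patterns)
    for _ in range(epochs):
        rng.shuffle(order)  # different random order on each pass
        mistakes = 0
        for inputs, expected in order:
            error = expected - predict(weights, bias_weight, inputs)
            if error != 0:
                mistakes += 1
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias_weight += learning_rate * error * 1.0
        if mistakes == 0:  # every pattern classified correctly: stop early
            break
    return weights, bias_weight


# Hypothetical training set: the logical OR function, which is linearly separable.
OR_PATTERNS = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]

weights, bias_weight = train(OR_PATTERNS, n_inputs=2)
predictions = [predict(weights, bias_weight, x) for x, _ in OR_PATTERNS]
```

Because OR is linearly separable, the loop stops once a full pass produces no errors, and `predictions` then matches the expected outputs of the four patterns.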