Contents

ToggleConstraint programming

The constraint programming solves decision-making problems. An optimization problem is solved successively after several decision problems. For example to determine a shortest way, we will seek to find a path of less than 100 (possible), then less than 50 (impossible), then less than 75, etc. until the optimal solution is found. PPC has a broader field of action than exact methods or metaheuristics.

Definition

PPC solves a constraint satisfaction problem (CSP).

Let's take an example of a triplet:

- variables: A, B, C, D

- areas :

- DTO = {1,4,5,8}

- DB = {2,3,5,7,9}

- DVS = {4,8,9}

- DD = {1,4,5,7,9}

- constraints:

- VS1(A, C): {(1.7); (1.9); (5.9); (8.9)}

- VS2(A, D): (1.1); (1.5); (5.5); (5.9); (8.9)}

- VS3(C, D): {(4.1); (8.1); (9.7)}

- VS4(B, D): {(2.7); (2.9); (5.8); (7.9); (9.9)}

It is possible to code the constraints in extension, by a set of tuples, or in intention, using operators whose semantics are known. An instantiation is a complete assignment of values to variables, for example { , , , }. Instantiation is a valid solution if the values given to the variables of which such as all the constraints are verified.

Naive resolution: Generate & Test

If we have a problem with n variables, each can only take two values, the processor does 1,000,000,000 calculations per second. For 30 variables, the resolution take at most 1s, for 50 variables, we go to 11 days; for 70 variables it is necessary to wait 317 centuries.

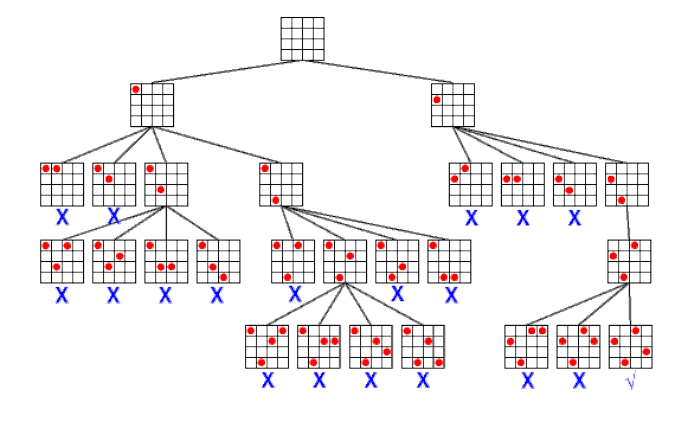

Backtrack resolution

It is akin to Branch & Cut.

This method does not generate the entire possibility tree unlike the naive method. On the other hand all the constraints are tested with each partial assignment and the convergence time also depends on the order of the variables.

Resolution by stress propagation

For example let us take x between 0 and 10 and y between 3 and 9; then with the constraint x> y we can restrict the domain of X between 4 and 10.

There are three cases where information is propagated:

- when a domain is reduced

- when one of the domain terminals is changed

- when a domain is a singleton.

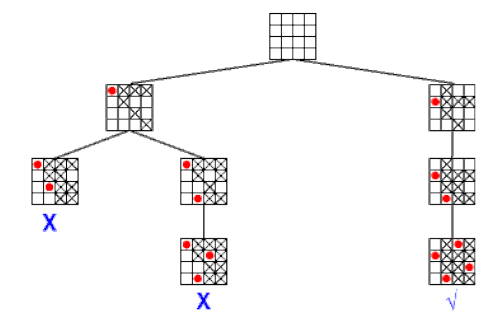

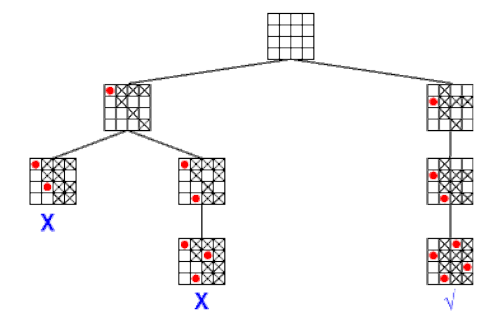

It is then possible to propagate the information once (node-consistency), twice (arc-consistency) or more.

In the node-consistency, for each variable Xi unaffected in A, we subtract from DXi any value v such that the assignment A ∪ {(Xi, v)} is inconsistent. The authorized values are propagated in depth.

In the arc-consistency, for each variable Xi unaffected in A, we subtract from DXi any value v such that there is a variable Xj unassigned for which, for any value w of DXj, the assignment A ∪ {(Xi, v); (Xj, w)} is inconsistent. The authorized values are propagated in depth.

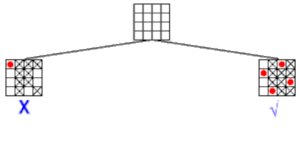

The larger the comparison tuple, the greater the computation time to define the valid domain of the variables. Arc-consistency is less efficient than knot-consistency if the domains are large.