
Correlation and Regressions

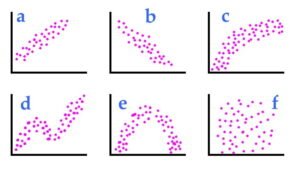

In statistics, correlation or dependence is any statistical relationship, causal or not, between two random variables or bivariate data. Although in the broadest sense "correlation" can indicate any type of association, in statistics it normally refers to the degree to which a pair of variables is linearly related. Familiar examples of dependent phenomena include the correlation between the height of parents and that of their offspring, and the correlation between the price of a good and the quantity that consumers are willing to buy, as represented by the so-called demand curve.
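The degree of linear relationship described above is commonly quantified with the Pearson correlation coefficient, a measure the text does not name and which is assumed here for illustration. The following is a minimal sketch in plain Python; the function name `pearson_r` and the height figures are hypothetical and not taken from any real data set.

```python
import math

def pearson_r(xs, ys):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squared deviations from the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical heights in centimetres, invented for this example.
parent_height = [160, 165, 170, 175, 180]
child_height = [162, 166, 171, 173, 179]

r = pearson_r(parent_height, child_height)
print(f"Pearson r = {r:.3f}")
```

The coefficient lies between -1 and 1. Values near either extreme indicate a strong linear relationship, while a value near 0 indicates little linear relationship, although a non-linear dependence may still exist.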

Correlations are useful because they can indicate a predictive relationship that can be exploited in practice. For example, an electric utility may produce less electricity in mild weather based on the correlation between electricity demand and weather conditions. In this example, there is a causal relationship, as extreme weather causes people to use more electricity for heating or cooling. However, in general, the presence of a correlation is not sufficient to infer the presence of a causal relationship (i.e. correlation does not imply causation).

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships between a dependent variable (often called the "outcome" or "response" variable) and one or more independent variables (often called "predictors", "covariates", "explanatory variables" or "features"). The most common form of regression analysis is linear regression, in which one finds the line (or a more complex linear combination) that best fits the data according to a specific mathematical criterion.

For example, the ordinary least squares method computes the unique line (or hyperplane) that minimizes the sum of the squared differences between the observed data and that line (or hyperplane). For specific mathematical reasons (see linear regression), this allows the researcher to estimate the conditional expectation (or population mean value) of the dependent variable when the independent variables take on a given set of values. Less common forms of regression use slightly different procedures to estimate alternative location parameters (e.g., quantile regression or necessary condition analysis) or to estimate the conditional expectation across a broader collection of non-linear models (e.g., nonparametric regression).
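As an illustration of the least squares criterion, here is a minimal sketch for the single-predictor case, using the standard closed-form solution. The function names `ols_line` and `sum_squared_residuals` and the data points are hypothetical, chosen only for this example.

```python
def ols_line(xs, ys):
    """Return (intercept, slope) of the ordinary least squares line y = a + b*x."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("slope is undefined when all x values are equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    # The fitted line always passes through the point of means.
    intercept = mean_y - slope * mean_x
    return intercept, slope

def sum_squared_residuals(xs, ys, intercept, slope):
    """Sum of squared differences between observed y and the line's prediction."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))

# Hypothetical observations, invented for this example.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

intercept, slope = ols_line(xs, ys)
best = sum_squared_residuals(xs, ys, intercept, slope)
# Any other line gives a larger sum of squared residuals.
worse = sum_squared_residuals(xs, ys, intercept, slope + 0.1)
print(f"y = {intercept:.2f} + {slope:.2f} x, SSR = {best:.3f} (perturbed: {worse:.3f})")
```

The fitted value `intercept + slope * x` serves as the estimate of the conditional mean of the dependent variable at a given `x`. With several predictors the same criterion is usually solved with matrix methods rather than this closed form.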

Regression analysis is primarily used for two conceptually distinct purposes.

First, regression analysis is widely used for prediction and forecasting, where its use significantly overlaps with the field of machine learning.

Second, in some situations, regression analysis can be used to infer causal relationships between independent and dependent variables. It is important to note that regressions by themselves only reveal the relationships between a dependent variable and a set of independent variables in a fixed data set. To use regressions for prediction or to infer causal relationships, respectively, a researcher must carefully justify why existing relationships have predictive power for a new context or why a relationship between two variables has a causal interpretation. The latter is particularly important when researchers hope to estimate causal relationships using observational data.
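To see why a causal reading needs separate justification, consider the following sketch with entirely hypothetical data. A hidden variable `z` drives both `x` and `y`, and `y` is constructed without any reference to `x`. A regression of `y` on `x` still produces a large slope. The variable names, the noise values, and the data-generating process are all assumptions made for illustration.

```python
def ols_slope(xs, ys):
    """Slope of the ordinary least squares line for a single predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx

# Hidden common cause (hypothetical).
z = [float(i) for i in range(10)]

# Small fixed perturbations, used instead of random noise so the
# example is reproducible.
noise_x = [0.3, -0.2, 0.1, -0.4, 0.2, -0.1, 0.4, -0.3, 0.0, 0.0]
noise_y = [-0.1, 0.3, -0.2, 0.0, 0.2, -0.3, 0.1, 0.0, 0.4, -0.4]

x = [zi + e for zi, e in zip(z, noise_x)]
y = [3.0 * zi + e for zi, e in zip(z, noise_y)]  # x does not appear here

slope = ols_slope(x, y)
print(f"slope of y on x = {slope:.2f}")
```

In this constructed setting, changing `x` directly would leave `y` unchanged, yet the fitted slope is close to 3. The regression describes the association in the fixed data set correctly; it is the causal interpretation that would be mistaken.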