- Sum of squared errors

- Dispersion criteria

- Category utility metric

- Cutting measures

- Ball Hall

- Banfield-Raftery

- Condorcet criterion

- C index

- Calinski-Harabasz

- Davies-Bouldin

- Det_Ratio

- Dunn

- GDImn

- Gamma

- G+

- Ksq_DetW

- Log_Det_Ratio

- Log_SS_Ratio

- McClain-Rao

- PBM

- Point-biserial

- Ratkowsky-Lance

- Ray-Turi

- Scott-Symons

- SD_Scat

- SD_Dis

- S_Dbw

- Silhouette

- Trace W

- WiB trace

- Wemmert-Gançarski

- Xie-Beni

- Hierarchical agglomerative

- Hierarchical divisive

- Relative hierarchical

- Squared error-based

- PDF estimation via mixture densities

- Graph theory-based

- Combinatorial search techniques-based

- Large-scale data sets

- Data visualization and high-dimensional data

Choice of methods:

- Cases where clustering is useless

- Clustering, the basic algorithms to know

- 17 clustering algorithms for all use cases

- Introduction to Spectral Clustering

Choice of metrics:

Use case:

Data partitioning

A complete cluster analysis follows a sequence of steps, and the decision taken at each step affects the final result:

- The entities to be grouped must be selected. They should be chosen so that they are representative of the cluster structure in the population.

- The variables to be used in the cluster analysis must be selected. Again, they must contain enough information to allow the objects to be grouped.

- The user must decide whether or not to normalize the data. If normalization is to be performed, the user must select a procedure from among several different approaches.

- A measure of similarity or dissimilarity must be selected. Such a measure reflects the degree of proximity or separation between objects.

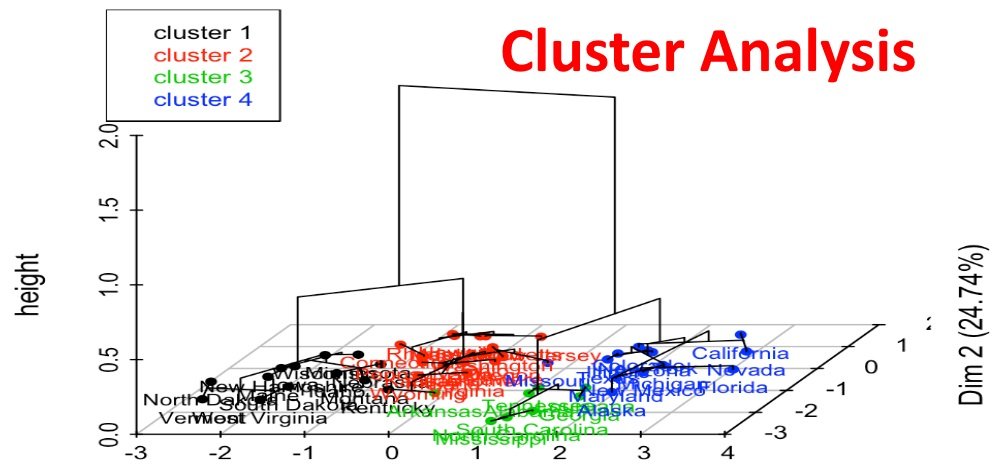

- A clustering method must be selected. The user's concept of what constitutes a cluster is important because different methods have been devised to find different types of cluster structures.

- The number of clusters must be determined.

- The final step in the clustering process is to interpret, test, and replicate the cluster analysis. Interpreting the clusters in the context of the application requires the user's knowledge of and expertise in the discipline concerned. Testing determines whether the data contain a significant cluster structure or whether the partition is merely an arbitrary split of random noise. Finally, replication determines whether the resulting cluster structure can be reproduced on other samples.

Although variations on this seven-phase process may be necessary to suit a particular application, this sequence represents the critical steps in a cluster analysis.
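As an illustration, steps three to six can be sketched in a few lines of plain Python. This is only a minimal sketch under stated assumptions: the toy data set, the function names `zscore`, `euclidean`, and `kmeans`, the choice of z-score normalization, Euclidean distance, and k-means, and the hand-picked initial centers are all hypothetical choices made for the example, not recommendations from the text.

```python
import math

def zscore(rows):
    """Step 3: rescale each variable to mean 0 and standard deviation 1."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    # A constant column has standard deviation 0; fall back to 1.0 to avoid dividing by zero.
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c)) or 1.0
            for c, m in zip(cols, means)]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]

def euclidean(a, b):
    """Step 4: dissimilarity between two objects."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def kmeans(points, centers, max_iter=100):
    """Step 5: Lloyd's k-means. Step 6 (number of clusters) is fixed by len(centers)."""
    centers = [list(c) for c in centers]
    labels = None
    for _ in range(max_iter):
        # Assign each point to its nearest center.
        new_labels = [min(range(len(centers)), key=lambda j: euclidean(p, centers[j]))
                      for p in points]
        if new_labels == labels:
            break  # assignments are stable, so the algorithm has converged
        labels = new_labels
        # Move each center to the mean of its members.
        for j in range(len(centers)):
            members = [p for p, l in zip(points, labels) if l == j]
            if members:  # keep the old center if a cluster becomes empty
                centers[j] = [sum(c) / len(members) for c in zip(*members)]
    return labels, centers

# Hypothetical toy data: (height in cm, income in euros), two well-separated groups.
data = [[150, 20000], [152, 21000], [151, 20500],
        [180, 60000], [182, 61000], [181, 60500]]

scaled = zscore(data)
labels, centers = kmeans(scaled, centers=[scaled[0], scaled[3]])
print(labels)  # [0, 0, 0, 1, 1, 1]
```

Without the normalization step, the income variable would dominate the Euclidean distance simply because of its scale, which is why the choice made at step three interacts with the choice made at step four.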

Data partitioning and classification are two fundamental tasks in data mining. Classification is primarily a supervised learning method, whereas data partitioning is used for unsupervised learning (some partitioning models do both). The purpose of data partitioning is descriptive; that of classification is predictive. Since data partitioning aims to discover a new set of categories, the new groups are interesting in themselves and their evaluation is intrinsic. In classification tasks, however, an important part of the assessment is extrinsic, because the groups must reflect a set of reference classes.
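The difference between intrinsic and extrinsic evaluation can be made concrete with a small sketch. The intrinsic score below is the within-cluster sum of squared errors, which only needs the data and the partition; the extrinsic score is purity, which also needs reference classes. The function names, the toy points, and the choice of these two particular measures are assumptions made for illustration.

```python
from collections import Counter

def within_cluster_ss(points, labels):
    """Intrinsic: sum of squared distances from each point to its cluster centroid."""
    total = 0.0
    for k in set(labels):
        members = [p for p, l in zip(points, labels) if l == k]
        centroid = [sum(c) / len(members) for c in zip(*members)]
        total += sum((x - m) ** 2 for p in members for x, m in zip(p, centroid))
    return total

def purity(labels, reference):
    """Extrinsic: share of points falling in the majority reference class of their cluster."""
    hits = 0
    for k in set(labels):
        classes = [r for l, r in zip(labels, reference) if l == k]
        hits += Counter(classes).most_common(1)[0][1]
    return hits / len(labels)

# Hypothetical example: four points, a two-cluster partition, and reference classes.
points = [[0.0, 0.0], [0.0, 2.0], [10.0, 0.0], [10.0, 2.0]]
labels = [0, 0, 1, 1]
reference = ["a", "a", "b", "a"]

print(within_cluster_ss(points, labels))  # 4.0
print(purity(labels, reference))          # 0.75
```

The partition is compact by the intrinsic measure, yet it only partly agrees with the reference classes, which shows that the two kinds of evaluation answer different questions.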

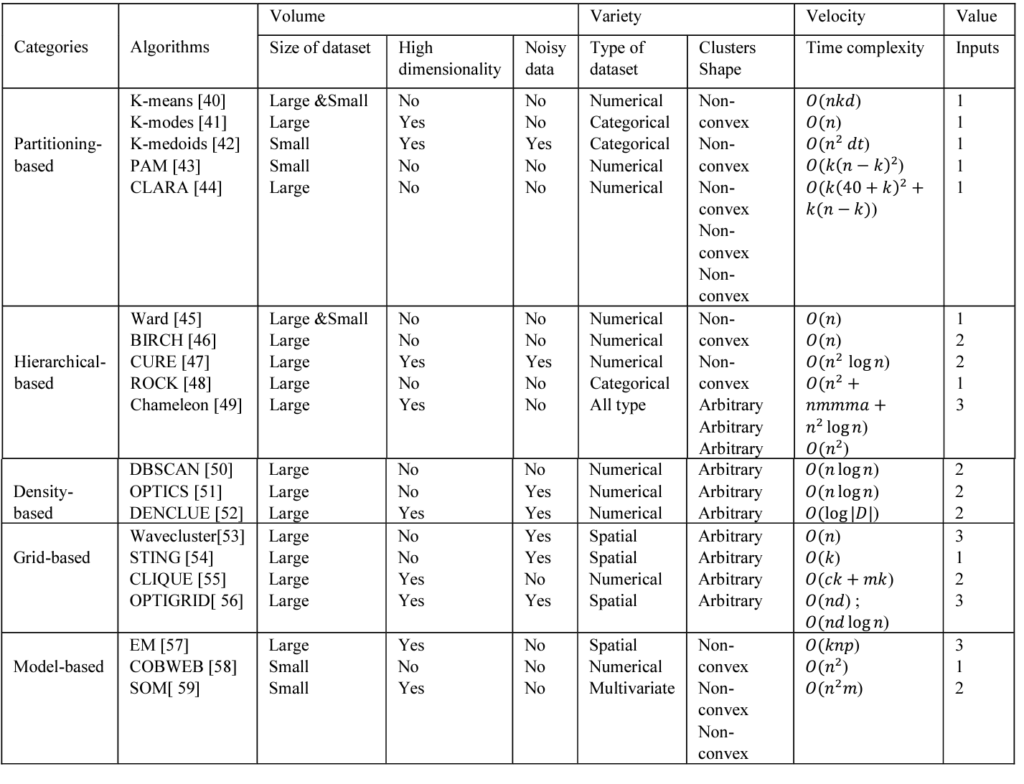

Here is a 4V comparison of the most frequently used algorithms:

The strengths and weaknesses of each category:

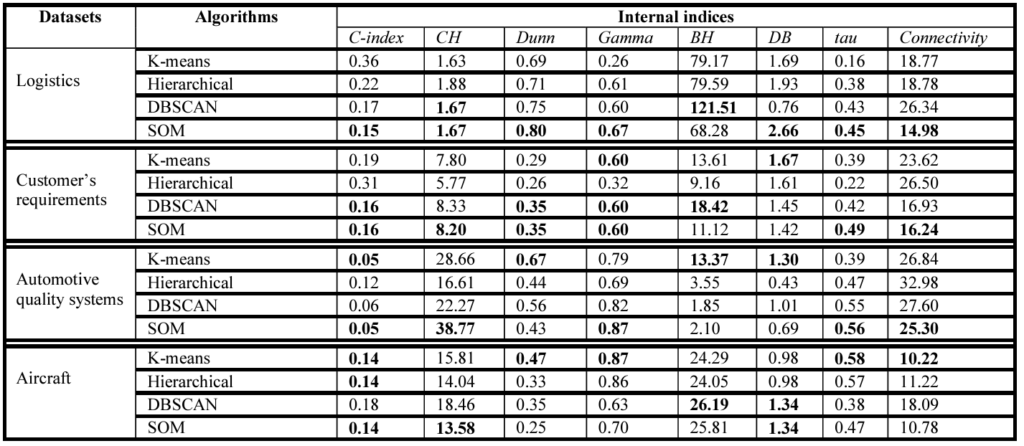

As well as the most common comparison metrics: