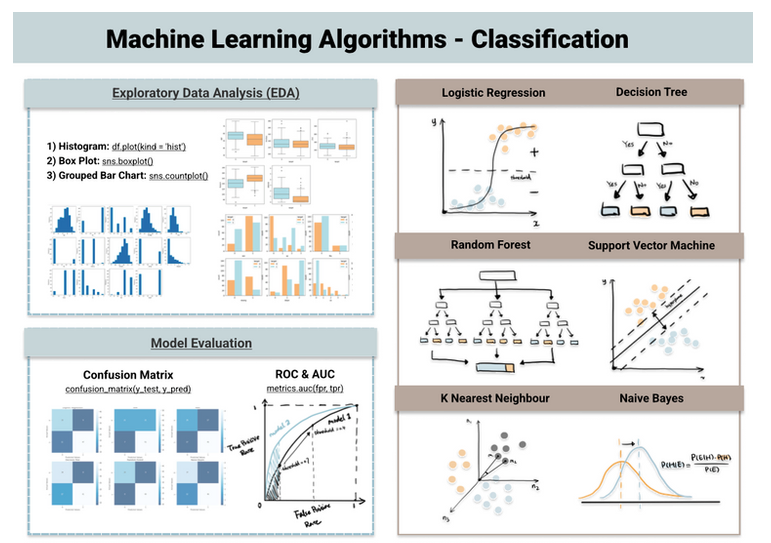

This page presents a pipeline for classification, that is, a process that runs from exploring the data, through classification, to evaluating the models.

Classification Pipeline Overview

Supervised learning can be subdivided into classification and regression algorithms. A classification model identifies the category an object belongs to, while a regression model predicts a continuous output.

Sometimes the line between classification and regression algorithms is blurry. Many algorithms can be used for both, and some classifiers, such as logistic regression, are essentially a regression model with a threshold applied: when the predicted value is above the threshold, the object is classified as true, otherwise as false.

In this article, we will discuss six common machine learning algorithms for classification problems: Logistic Regression, Decision Tree, Random Forest, Support Vector Machine, k-Nearest Neighbors and Naive Bayes. Here is the pipeline for classification.

Logistic regression

Logistic regression uses the sigmoid function, σ(z) = 1 / (1 + e^(-z)), to turn a linear score z into the probability of a label. It is widely used when the classification problem is binary, such as true or false, winner or loser, positive or negative.

The sigmoid function outputs a probability between 0 and 1. Comparing this probability with a predefined threshold (commonly 0.5) assigns the object its label.
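To make the probability-plus-threshold step concrete, here is a minimal sketch that recomputes a fitted scikit-learn logistic regression's probabilities by hand with the sigmoid and then applies a threshold. The synthetic `make_classification` data, the helper name `sigmoid` and the 0.5 threshold are illustrative assumptions, not part of the original pipeline.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression


def sigmoid(z):
    """Map any real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


# Synthetic binary data standing in for a real dataset (assumption for illustration)
X, y = make_classification(n_samples=200, n_features=4, random_state=0)
reg = LogisticRegression().fit(X, y)

# Linear score z = w·x + b, squashed by the sigmoid into P(y = 1 | x)
z = X @ reg.coef_.ravel() + reg.intercept_[0]
proba = sigmoid(z)

# Compare each probability with the threshold to assign a 0/1 label
threshold = 0.5
labels = (proba >= threshold).astype(int)
```

Raising the threshold makes the model more conservative about predicting the positive class; lowering it does the opposite.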

```python
from sklearn.linear_model import LogisticRegression

# Fit a logistic regression classifier and predict labels for the test set
reg = LogisticRegression()
reg.fit(X_train, y_train)
y_pred = reg.predict(X_test)
```

Decision tree

A decision tree builds branches hierarchically, and each branch can be thought of as an if-else statement. Branches grow by splitting the dataset into subsets on the feature (and threshold) that best separates the classes, as measured by a criterion such as Gini impurity.
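The split criterion can be sketched from scratch. Assuming Gini impurity (scikit-learn's default `criterion` for `DecisionTreeClassifier`), the hypothetical helpers `gini` and `best_split` below score every midpoint threshold on a single feature and keep the one with the lowest weighted impurity. A real tree repeats this search over all features and then recurses into each branch.

```python
import numpy as np


def gini(labels):
    """Gini impurity: 1 - sum_k p_k^2 over the class proportions p_k."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def best_split(x, y):
    """Return (threshold, weighted_impurity) of the best split x <= t on one feature."""
    order = np.argsort(x)
    x_sorted, y_sorted = x[order], y[order]
    n = len(y)
    best_t, best_score = None, np.inf
    for i in range(1, n):
        if x_sorted[i] == x_sorted[i - 1]:
            continue  # no threshold can separate equal values
        t = (x_sorted[i] + x_sorted[i - 1]) / 2  # candidate threshold at the midpoint
        left, right = y_sorted[:i], y_sorted[i:]
        # Impurity of the two children, weighted by how many samples each receives
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best_score:
            best_t, best_score = t, score
    return best_t, best_score
```

For example, `best_split(np.array([1.0, 2.0, 3.0, 4.0]), np.array([0, 0, 1, 1]))` picks the threshold 2.5, where both children are pure (weighted impurity 0).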

```python
from sklearn.tree import DecisionTreeClassifier

# Fit a decision tree classifier and predict labels for the test set
dtc = DecisionTreeClassifier()
dtc.fit(X_train, y_train)
y_pred = dtc.predict(X_test)
```

Random forest

As the name suggests, a random forest is a collection of decision trees. It is a common type of ensemble method, which aggregates the results of multiple predictors. Random forest also uses bagging: each tree is trained on a random bootstrap sample of the original dataset, and the trees' predictions are combined by majority vote (scikit-learn's implementation averages the trees' predicted probabilities instead). Compared with a single decision tree, it generalizes better but is less interpretable, because each prediction aggregates many trees.
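The bagging-and-voting idea can be sketched in a few lines. This is a simplified illustration, not scikit-learn's `RandomForestClassifier` (which averages probabilities rather than counting votes); the helper name `bagged_trees_predict`, the choice of 25 trees and the synthetic data are assumptions, and labels are assumed to be 0/1.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def bagged_trees_predict(X_train, y_train, X_test, n_trees=25, seed=0):
    """Train trees on bootstrap samples and combine them by majority vote (0/1 labels)."""
    rng = np.random.default_rng(seed)
    n = len(X_train)
    votes = []
    for _ in range(n_trees):
        # Bootstrap: draw n row indices with replacement
        idx = rng.integers(0, n, size=n)
        # max_features="sqrt" adds random-forest-style feature subsampling at each split
        tree = DecisionTreeClassifier(max_features="sqrt",
                                      random_state=int(rng.integers(1_000_000)))
        tree.fit(X_train[idx], y_train[idx])
        votes.append(tree.predict(X_test))
    votes = np.array(votes)  # shape (n_trees, n_test)
    # Majority vote: with an odd number of trees and 0/1 labels there are no ties
    return (votes.mean(axis=0) > 0.5).astype(int)


# Synthetic stand-in data (assumption for illustration)
X, y = make_classification(n_samples=300, n_features=5, n_informative=3,
                           n_redundant=0, random_state=0)
y_pred = bagged_trees_predict(X[:200], y[:200], X[200:])
accuracy = np.mean(y_pred == y[200:])
```

Because each tree sees a different bootstrap sample, their errors are partly independent, and the vote smooths them out; that is the source of the better generalization mentioned above.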

```python
from sklearn.ensemble import RandomForestClassifier

# Fit a random forest classifier and predict labels for the test set
rfc = RandomForestClassifier()
rfc.fit(X_train, y_train)
y_pred = rfc.predict(X_test)
```

Support Vector Machine (SVM)

The support vector machine classifies data points according to their position relative to a boundary between the positive and negative classes. This boundary, the hyperplane, is chosen to maximize the margin, that is, the distance between the hyperplane and the nearest data points of each class (the support vectors). Like decision trees and random forests, support vector machines can be used for both classification and regression; SVC (support vector classifier) is the variant used for classification problems.
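A small worked example shows the margin idea. Assuming a linear kernel and a large `C` (which approximates a hard-margin SVM), the toy points below are separated by the vertical line x1 = 1, and the margin width 2 / ||w|| comes out close to 2, the gap between the two classes. The toy data and the value `C=1000` are illustrative assumptions.

```python
import numpy as np
from sklearn.svm import SVC

# Toy, linearly separable data: class 0 at x1 = 0, class 1 at x1 = 2
X = np.array([[0.0, 0.0], [0.0, 1.0], [2.0, 0.0], [2.0, 1.0]])
y = np.array([0, 0, 1, 1])

# A linear kernel with a large C approximates a hard-margin SVM
svc = SVC(kernel="linear", C=1000).fit(X, y)

w = svc.coef_.ravel()   # normal vector of the hyperplane w·x + b = 0
b = svc.intercept_[0]
# Distance between the two margin lines w·x + b = +1 and w·x + b = -1
margin_width = 2 / np.linalg.norm(w)

print(svc.support_vectors_)  # the training points that pin down the margin
print(svc.predict([[0.5, 0.5], [1.5, 0.5]]))
```

Only the support vectors determine the hyperplane; moving any other training point (without crossing the margin) would not change it.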

```python
from sklearn.svm import SVC

svc = SVC()
svc.fit(X_train, y_train)
y_pred = svc.predict(X_test)
```

k-nearest neighbors (kNN)

You can think of the k-nearest neighbors algorithm as representing each data point in an n-dimensional space, where n is the number of features. It calculates the distance from an unlabeled point to the observed points, then assigns it the majority label of its k nearest observed neighbors.
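The distance-then-vote procedure fits in a short from-scratch function. This sketch assumes Euclidean distance and k = 3; the helper name `knn_predict` and the toy points are hypothetical, and the result is compared with scikit-learn's `KNeighborsClassifier` on the same data.

```python
import numpy as np
from collections import Counter
from sklearn.neighbors import KNeighborsClassifier


def knn_predict(X_train, y_train, X_query, k=3):
    """Label each query point by majority vote among its k nearest training points."""
    preds = []
    for x in X_query:
        # Euclidean distance from the query point to every training point
        dists = np.linalg.norm(X_train - x, axis=1)
        nearest = np.argsort(dists)[:k]
        # Most common label among the k nearest neighbors
        preds.append(Counter(y_train[nearest]).most_common(1)[0][0])
    return np.array(preds)


# Two well-separated toy clusters (illustrative data)
X_train = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]], dtype=float)
y_train = np.array([0, 0, 0, 1, 1, 1])
X_query = np.array([[0.2, 0.2], [5.5, 5.5]])

manual = knn_predict(X_train, y_train, X_query, k=3)
library = KNeighborsClassifier(n_neighbors=3).fit(X_train, y_train).predict(X_query)
```

Note that kNN does no real training: all the work happens at prediction time, when distances to every stored point are computed.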

```python
from sklearn.neighbors import KNeighborsClassifier

knn = KNeighborsClassifier()
knn.fit(X_train, y_train)
y_pred = knn.predict(X_test)
```

Naive Bayes

Naive Bayes is based on Bayes' theorem, an approach to calculating conditional probability from prior knowledge, combined with the naive assumption that the features are independent of one another given the class. Its biggest advantage is that, while most machine learning algorithms rely on a large amount of training data, it performs relatively well even when the training set is small. Gaussian Naive Bayes is a Naive Bayes classifier that assumes each feature follows a normal distribution within each class.
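The Gaussian variant can be written out directly: estimate a prior, a mean and a variance per class, then pick the class with the highest log prior plus summed per-feature log-likelihoods. This is a sketch under stated assumptions: the helper names `fit_gaussian_nb` and `predict_gaussian_nb` are hypothetical, the small variance term `eps` mirrors the `var_smoothing` described in scikit-learn's `GaussianNB` documentation, and the data are synthetic.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.naive_bayes import GaussianNB


def fit_gaussian_nb(X, y, var_smoothing=1e-9):
    """Estimate class priors and per-class feature means and variances."""
    classes = np.unique(y)
    eps = var_smoothing * np.var(X, axis=0).max()  # keeps variances away from zero
    priors = np.array([np.mean(y == c) for c in classes])
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    variances = np.array([X[y == c].var(axis=0) + eps for c in classes])
    return classes, priors, means, variances


def predict_gaussian_nb(model, X):
    """Pick argmax_c of log P(c) + sum_j log N(x_j | mean_cj, var_cj)."""
    classes, priors, means, variances = model
    log_joint = []
    for prior, mu, var in zip(priors, means, variances):
        # Summing per-feature log-likelihoods is where the naive independence assumption enters
        ll = -0.5 * np.sum(np.log(2 * np.pi * var)) - 0.5 * np.sum((X - mu) ** 2 / var, axis=1)
        log_joint.append(np.log(prior) + ll)
    return classes[np.argmax(np.array(log_joint), axis=0)]


# Synthetic stand-in data (assumption for illustration)
X, y = make_classification(n_samples=200, n_features=4, n_informative=3,
                           n_redundant=0, random_state=1)
manual = predict_gaussian_nb(fit_gaussian_nb(X, y), X)
library = GaussianNB().fit(X, y).predict(X)
```

Only a mean and a variance per feature and class are estimated, which is why Naive Bayes copes relatively well with small training sets.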

```python
from sklearn.naive_bayes import GaussianNB

# Fit a Gaussian Naive Bayes classifier and predict labels for the test set
gnb = GaussianNB()
gnb.fit(X_train, y_train)
y_pred = gnb.predict(X_test)
```

Building the pipeline for classification

Loading the dataset and previewing the data

I chose the popular Heart Disease UCI dataset on Kaggle to predict the presence of heart disease based on several health-related factors. The first step in the pipeline for classification is understanding the data.

Use df.info() to get a summary view of the dataset, including data type, missing data, and number of records.
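A minimal loading-and-preview sketch with pandas, assuming the Kaggle CSV has been saved locally. The file name `heart.csv` and the helper names `load_and_preview` and `missing_report` are hypothetical; the `target` column name matches the one used later in this pipeline.

```python
import pandas as pd


def load_and_preview(path_or_buffer="heart.csv"):
    """Load the CSV, print a summary and the first rows, and return the DataFrame."""
    df = pd.read_csv(path_or_buffer)
    df.info()                           # dtypes, non-null counts and number of records
    print(df.head())                    # first five rows
    print(df["target"].value_counts())  # class balance of the label column
    return df


def missing_report(df):
    """Count missing values per column, keeping only columns that have any."""
    counts = df.isna().sum()
    return counts[counts > 0]
```

For example, `df = load_and_preview("heart.csv")` followed by `missing_report(df)` lists any columns with missing values; an empty result means there are none.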

Exploratory Data Analysis (EDA)

Histograms, clustered bar charts and box plots are suitable EDA techniques for classification problems.

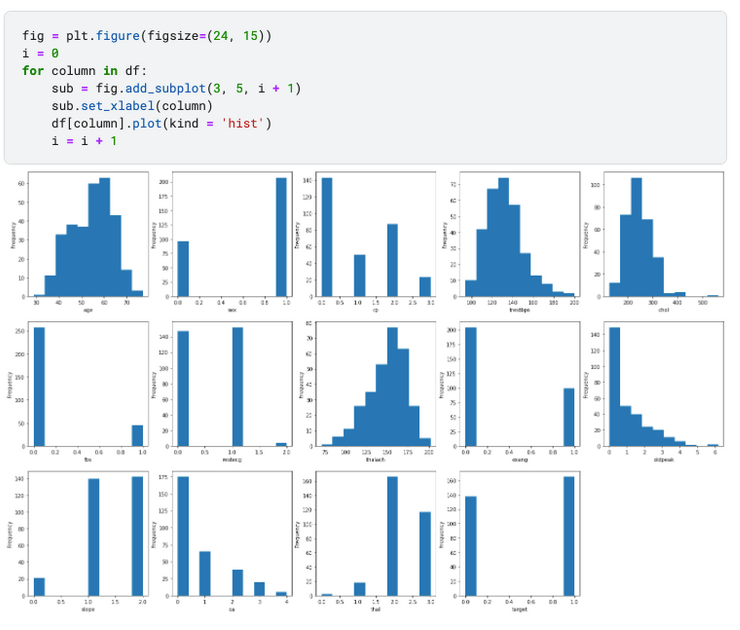

Univariate analysis

For univariate analysis, a histogram is plotted for every feature. This works because all features in the dataset are already encoded as numeric values, which also saves us the categorical encoding step that usually happens during feature engineering.
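Plotting one histogram per column takes a single pandas call. In this sketch the helper name `plot_histograms`, the bin count and the figure size are assumptions; the `Agg` backend line only makes it run without a display and can be dropped in a notebook.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs headless; omit in a notebook
import matplotlib.pyplot as plt
import pandas as pd


def plot_histograms(df, bins=15):
    """Draw one histogram per numeric column (univariate analysis)."""
    axes = df.hist(bins=bins, figsize=(12, 10))
    plt.tight_layout()
    return axes
```

With the heart-disease DataFrame, `plot_histograms(df)` followed by `plt.savefig("histograms.png")` (or `plt.show()` in an interactive session) produces the grid of distributions.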

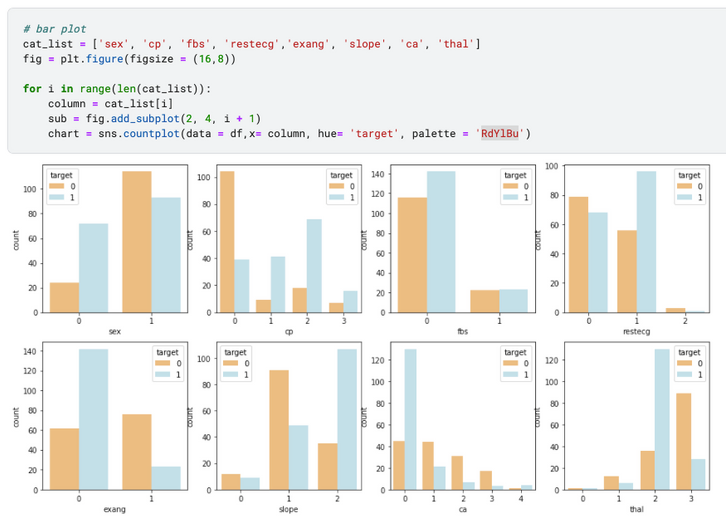

Categorical Features vs. Target – Clustered Bar Chart

A clustered bar chart is a simple way to show how much a categorical feature weighs in determining the target value. For example, sex=1 and sex=0 have distinctly different distributions of the target value, indicating that sex is likely to contribute to predicting the target. Conversely, if the target distribution is the same regardless of the category, the feature carries little information about the target.
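Both the chart and the comparison it supports can be built from `pd.crosstab`. In this sketch the helper names `target_share_by_category` and `plot_clustered_bar` are hypothetical: the first returns the share of each target class within each category (the numbers you compare by eye on the chart), and the second draws the clustered bars from raw counts.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs headless; omit in a notebook
import matplotlib.pyplot as plt
import pandas as pd


def target_share_by_category(df, feature, target="target"):
    """Proportion of each target class within each category of `feature`."""
    return pd.crosstab(df[feature], df[target], normalize="index")


def plot_clustered_bar(df, feature, target="target"):
    """Clustered bar chart: one group of bars per category, one bar per target class."""
    counts = pd.crosstab(df[feature], df[target])
    ax = counts.plot(kind="bar", rot=0)
    ax.set_ylabel("count")
    ax.set_title(f"{feature} vs. {target}")
    return ax
```

For the heart-disease data, `plot_clustered_bar(df, "sex")` draws the chart and `target_share_by_category(df, "sex")` gives the per-category target proportions; similar rows across categories suggest a weak predictor.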

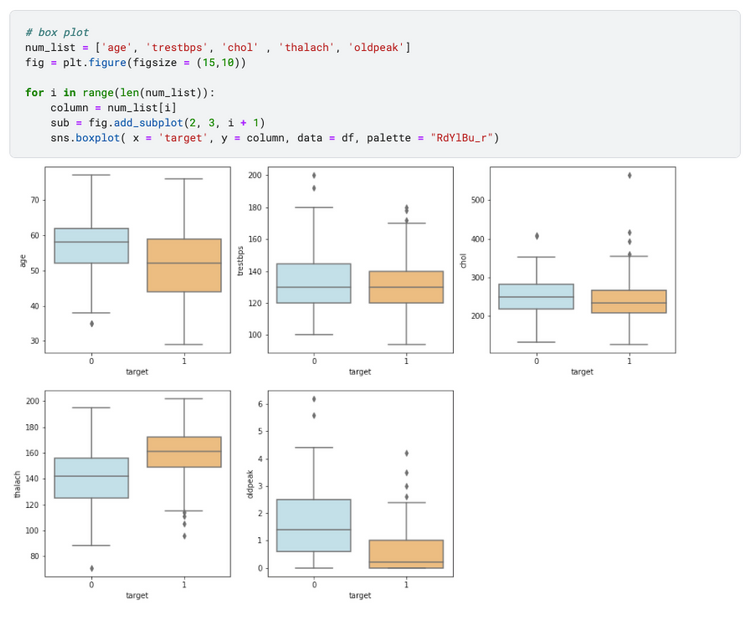

Numerical Features vs. Target – Boxplot

The boxplot shows how the values of the numerical features vary across the target groups. For example, 'oldpeak' differs distinctly between target 0 and target 1, suggesting that it is an important predictor. By contrast, 'trestbps' and 'chol' appear less discriminative, as their boxplots look similar in both target groups.
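Grouped boxplots come straight from pandas, and a per-group median table gives a quick numeric check of the same comparison. The helper names `median_by_target` and `plot_boxplots` and the figure sizing are assumptions for illustration.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs headless; omit in a notebook
import matplotlib.pyplot as plt
import pandas as pd


def median_by_target(df, features, target="target"):
    """Median of each numeric feature within each target group."""
    return df.groupby(target)[features].median()


def plot_boxplots(df, features, target="target"):
    """One boxplot panel per feature, with a box for each target group."""
    axes = df.boxplot(column=features, by=target, figsize=(4 * len(features), 4))
    plt.tight_layout()
    return axes
```

For the heart-disease data, `plot_boxplots(df, ["oldpeak", "trestbps", "chol"])` draws the panels, and `median_by_target(df, ["oldpeak", "trestbps", "chol"])` shows whether the group medians differ.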

Dataset separation

Classification algorithms fall under supervised learning, so the model learns from labeled examples and must be judged on examples it has not seen. The dataset is therefore split into a training subset and a test subset (sometimes also a validation subset). The model is trained on the training set and then evaluated on the test set.

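```python
from sklearn.model_selection import train_test_split
from sklearn import preprocessing

# df is the heart-disease DataFrame loaded earlier; "target" is the label column
X = df.drop(["target"], axis=1)
y = df["target"]

# Hold out a third of the rows as the test set; random_state makes the split reproducible
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=42)
```

Classification pipeline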

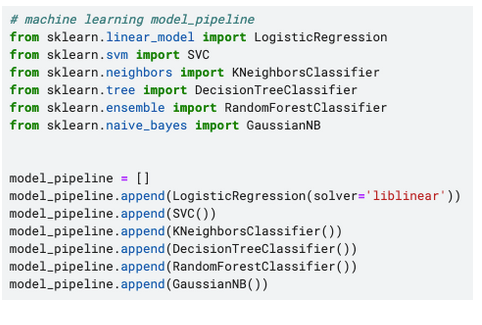

To create the pipeline, I add all six classification algorithms mentioned above to a list of models, then loop through the list to train, predict and evaluate each one.

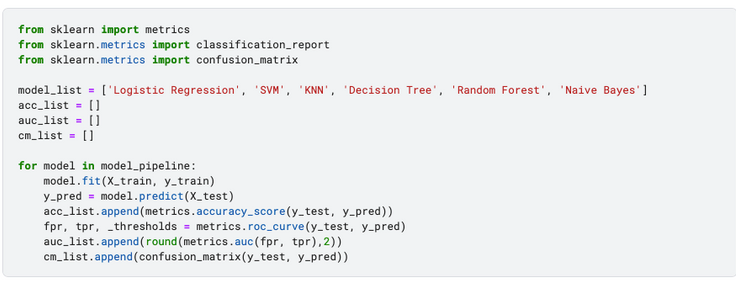
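The model list itself appears only as an image, so here is a minimal sketch of what such a loop could look like; it is not the author's exact code. It assumes scikit-learn defaults for every model except `max_iter=1000` for logistic regression and fixed `random_state` values, and it uses synthetic `make_classification` data as a stand-in so that it runs on its own. With the heart-disease data, you would reuse the split from the previous step instead.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

models = [
    ("Logistic Regression", LogisticRegression(max_iter=1000)),
    ("Decision Tree", DecisionTreeClassifier(random_state=42)),
    ("Random Forest", RandomForestClassifier(random_state=42)),
    ("Support Vector Machine", SVC()),
    ("K-Nearest Neighbors", KNeighborsClassifier()),
    ("Gaussian Naive Bayes", GaussianNB()),
]


def evaluate_models(models, X_train, X_test, y_train, y_test):
    """Fit each model, predict on the test set and collect accuracy and ROC AUC."""
    results = {}
    for name, model in models:
        model.fit(X_train, y_train)
        y_pred = model.predict(X_test)
        # ROC AUC needs a continuous score: logistic regression and SVC expose
        # decision_function, the other four models expose predict_proba
        if hasattr(model, "decision_function"):
            scores = model.decision_function(X_test)
        else:
            scores = model.predict_proba(X_test)[:, 1]
        results[name] = {
            "accuracy": accuracy_score(y_test, y_pred),
            "roc_auc": roc_auc_score(y_test, scores),
        }
    return results


# Synthetic stand-in for the heart-disease data (assumption for illustration)
X, y = make_classification(n_samples=300, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=42)
results = evaluate_models(models, X_train, X_test, y_train, y_test)
for name, metrics in results.items():
    print(f"{name:25s} accuracy={metrics['accuracy']:.3f} auc={metrics['roc_auc']:.3f}")
```

Keeping the models in a list of `(name, estimator)` pairs means adding a seventh algorithm is a one-line change, and every model is scored with exactly the same split and metrics.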

Model evaluation

Here is a brief overview of commonly used evaluation methods for classification models: accuracy, ROC and AUC, and the confusion matrix. The pipeline for classification covers data processing, the models and their evaluation.

Accuracy is the simplest indicator of model performance. It measures the percentage of correct predictions.

The ROC curve plots the true positive rate against the false positive rate at different classification thresholds. AUC is the area under the ROC curve: a higher AUC indicates better model performance, with 0.5 corresponding to random guessing and 1.0 to a perfect ranking of positives above negatives.
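A tiny example shows how the curve and the area are computed with scikit-learn's metrics. The four labels and scores are toy values chosen for illustration; `roc_curve` returns one (false positive rate, true positive rate) point per threshold, and `auc` integrates those points with the trapezoidal rule.

```python
import numpy as np
from sklearn.metrics import auc, roc_auc_score, roc_curve

y_true = np.array([0, 0, 1, 1])
scores = np.array([0.1, 0.4, 0.35, 0.8])  # toy predicted probabilities for class 1

# One (FPR, TPR) point per threshold; plotting tpr against fpr gives the ROC curve
fpr, tpr, thresholds = roc_curve(y_true, scores)

# Area under that curve, which matches roc_auc_score computed on the raw scores
area = auc(fpr, tpr)
print(area, roc_auc_score(y_true, scores))  # 0.75 0.75
```

The AUC of 0.75 here means that a randomly chosen positive gets a higher score than a randomly chosen negative in three out of four pairs.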

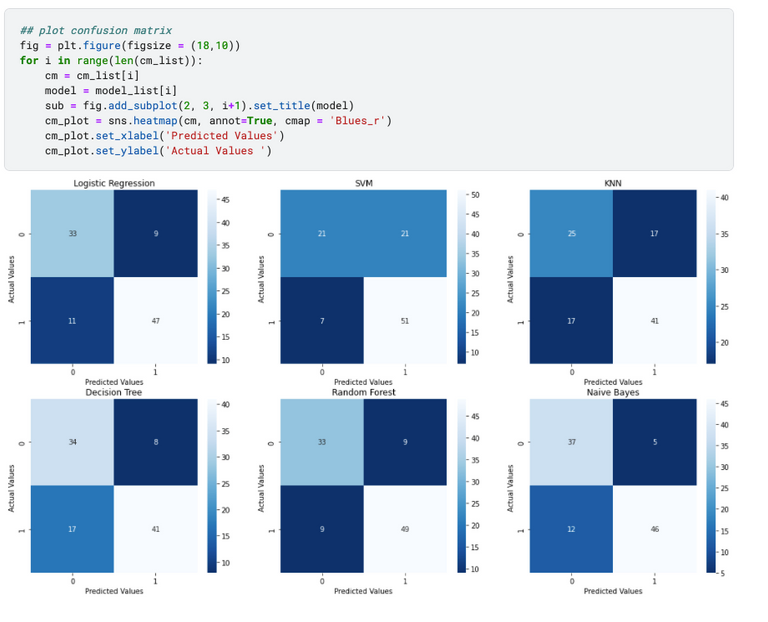

The confusion matrix shows actual versus predicted values and summarizes the true negative, false positive, false negative, and true positive values in a matrix format.

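| | Predicted negative | Predicted positive |
|---|---|---|
| Actual negative | True Negative | False Positive |
| Actual positive | False Negative | True Positive |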
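For 0/1 labels, scikit-learn's `confusion_matrix` uses the same layout as the table above (rows are actual classes, columns are predicted classes), so the four counts can be unpacked directly. The toy labels are illustrative; the sketch also shows that accuracy is the diagonal of the matrix divided by the total.

```python
from sklearn.metrics import accuracy_score, confusion_matrix

y_true = [0, 0, 1, 1, 1]
y_pred = [0, 1, 1, 1, 0]

# Layout for binary 0/1 labels:
# [[TN, FP],
#  [FN, TP]]
cm = confusion_matrix(y_true, y_pred)
tn, fp, fn, tp = cm.ravel()

# Accuracy is the share of correct predictions: the diagonal over the total
accuracy = (tn + tp) / cm.sum()
print(cm)
print(accuracy, accuracy_score(y_true, y_pred))
```

Here there is one true negative, one false positive, one false negative and two true positives, giving an accuracy of 3/5 = 0.6.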

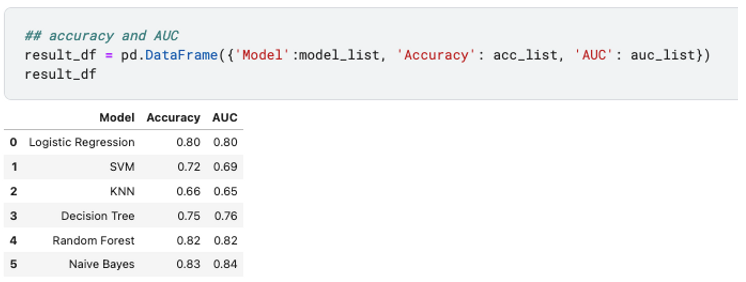

Based on the three evaluation methods above, Random Forest and Naive Bayes perform best, while KNN does not perform well. However, this does not mean that random forest and naive Bayes are superior algorithms. We can only say that they are better suited to this dataset, which is relatively small and whose features are not on the same scale.

Each algorithm has its own preferences and requires different data processing and feature engineering techniques. For example, KNN is sensitive to features on different scales, and multicollinearity makes the coefficients of logistic regression unstable. Understanding the characteristics of each algorithm allows us to balance the trade-offs and select an appropriate model for the dataset.
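The scale sensitivity of KNN is easy to demonstrate. In this sketch the data are synthetic and constructed on purpose: feature 0 carries the class signal on a small scale, while feature 1 is pure noise on a scale about a thousand times larger. Without scaling, the noise dominates the Euclidean distances; putting `StandardScaler` in front of the classifier with `make_pipeline` puts both features on comparable scales.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 400
y = rng.integers(0, 2, size=n)
# Feature 0 is informative but on a small scale; feature 1 is noise on a huge scale
informative = y + rng.normal(0, 0.3, size=n)
noise = rng.normal(0, 1000, size=n)
X = np.column_stack([informative, noise])

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

# Same classifier, with and without standardizing the features first
raw_knn = KNeighborsClassifier().fit(X_train, y_train)
scaled_knn = make_pipeline(StandardScaler(), KNeighborsClassifier()).fit(X_train, y_train)

raw_acc = raw_knn.score(X_test, y_test)
scaled_acc = scaled_knn.score(X_test, y_test)
print(f"unscaled: {raw_acc:.2f}  scaled: {scaled_acc:.2f}")
```

Wrapping the scaler in the pipeline also ensures it is fitted on the training data only, so no information from the test set leaks into the scaling.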

This marks the end of the classification pipeline!