Contents

ToggleTime complexity

The time complexity represents in an asymptotic way the time that a algorithm to find a solution.

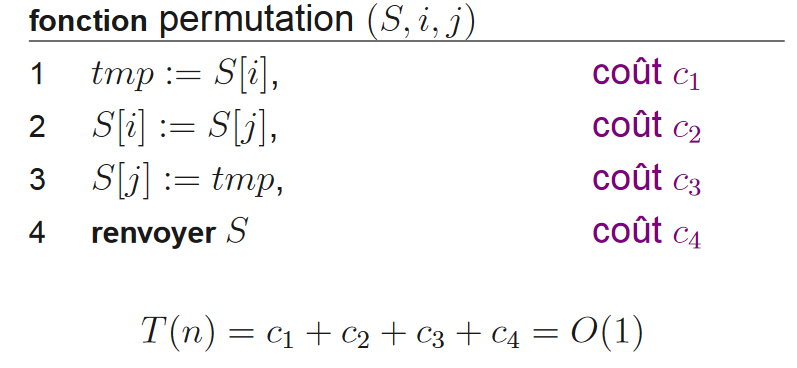

Elementary operation

The complexity consists in evaluating the effectiveness of the method M and comparing the latter with another method M '. This comparison is independent of the environment (machine, system, compiler, language, etc.). Efficiency depends on the number of elementary operations. These depend on the size of the data and the nature of the data.

In the case of a sequence type structure, the evaluation is equal to the sum of the costs. For example, if the algorithm has processing T1 (n) followed by T2 (n), then T (n) = T1 (n) + T2 (n).

In the case of a branch, the evaluation is equal to the maximum of branches. For example, the algorithm executes T1 (n) otherwise T2 (n), then T (n) = max (T1 (n), T2 (n)).

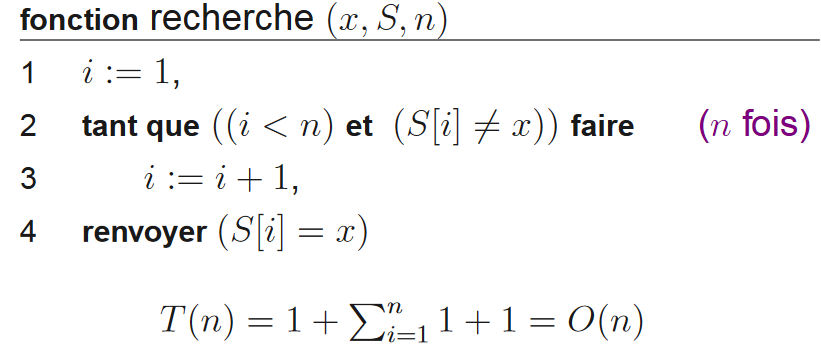

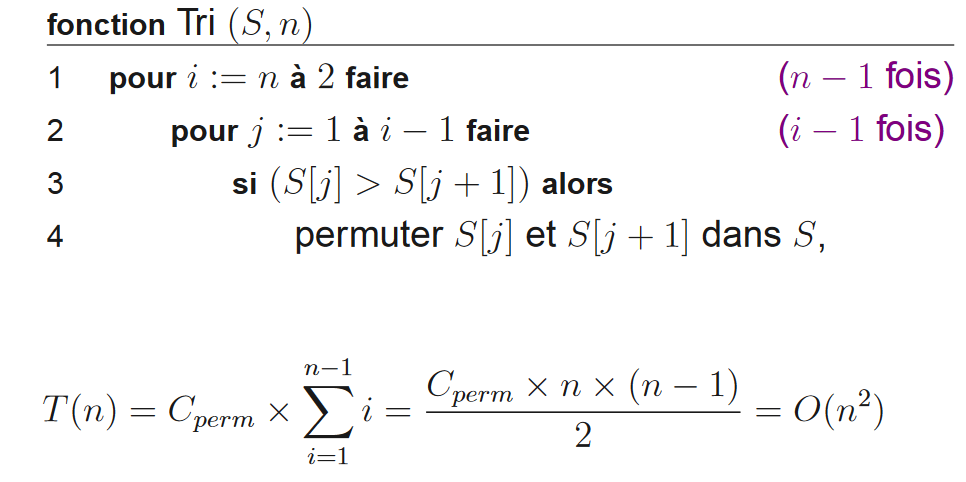

In the case of a loop, the evaluation is equal to the sum of the costs of the successive passages. For example, if the algorithm is a "while doing Ti (n) with the complexity of Ti (n) depending on the number i of the iteration. Then the complexity is T (n) = sum (i = 1 to n) Ti (n).

Landau notation

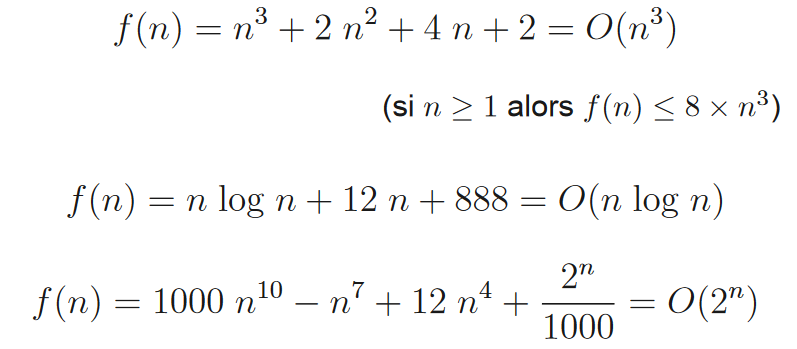

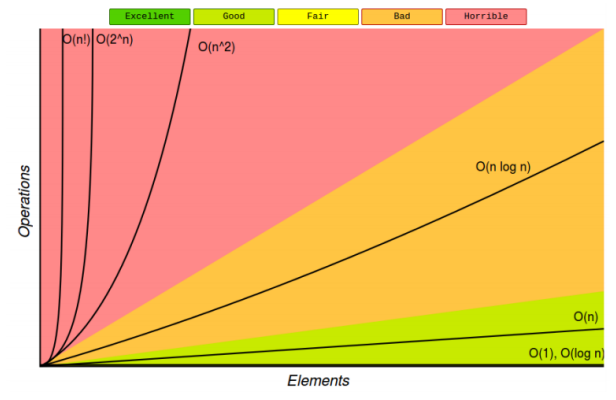

Landau's notation characterizes the asymptotic behavior of a function, ie the behavior of f (n) when n tends to infinity.

.

.

Examples

Worse, Better and Average

Consider an algorithm for finding an element in an array in iterative form. The algorithm stops when the value has been found. The algorithm breaks down as follows: assignment of 0 to i, as long as i has not traversed the array we increment i, if tab [i] = value then we return true, otherwise at the end of the array we return false. Let C denote the complexity of the body of the loop.

In the worst case, the algorithm traverses the whole table, that is to say that T (n) = 1 + n * C = O (n). At best, the element is at the start of the array, so T (n) = 1 + C = O (1). We consider that on average, there is a 50% chance that the element is not in the array, and 50% that it is in the middle of the array (this is of course absurd, but we will not do all the possibilities ). In this case, the complexity on average is T (n) = 0.5 * (1 + n * C) + 0.5 * (1 + n * C / 2) = a * n + b with a and b constants = O (not). We notice that the asymptotic behavior on average is the same as in the worst case.

Examples

Suppose that each of the expressions below gives the processing time T (n) spent by an algorithm to solve a problem of size n. Select the dominant term (s) with the greatest increase in n and specify the lowest Big-Oh complexity of each algorithm.

| Expression | Dominant | O (.) |

| 5 + 0.001n3+ 0.025n | 0.001n3 | We3) |

| 500n + 100n1.5 + 50n log10 not | 100n1.5 | We1.5) |

| 0.3n + 5n1.5 + 2.5 n1.75 | 2.5 n1.75 | We1.75) |

| not2 log2 n + n (log2 not)2 | not2 log2 not | We2 log n) |

| n log3 n + n log2 not | n log3 n, n log2 not | O (n log n) |

| 3 log8 n + log2 log2 log2 not | 3 log8 not | O (log n) |

| 0. 100n + 0.01n2 | 0.01n2 | We2) |

| 0.01n + 100n2 | 100n2 | We2) |

| 2n + n0.5 + 0.5n1.25 | 0.5n1.25 | We1.25) |

| 0.01n log2 n + n (log2 not)2 | n (log2 not)2 | O (n (log n)2) |

| 100n log3 n + n3 + 100n | not3 | We3) |

The instructions below show some characteristics of the “Big-Oh” notation for the functions f ≡ f (n) and g ≡ g (n). Determine if each statement is TRUE or FALSE and correct the formula in the latter case.

| Function | TRUE or FALSE? | After correction |

| O (f + g) = O (f) + O (g) | FALSE | O (f + g) = max {O (f), O (g)} |

| O (f g) = O (f) O (g) | TRUE | |

| if g = O (f) and h = O (f) then g = O (h) | FALSE | if g = O (f) and f = O (h) then g = O (h) |

| 5n + 8n 2 + 100n 3 = O (n 4) | TRUE | |

| 5n + 8n 2 + 100n 3 = O (n 2 log n) | FALSE | We3 ) |