Contents

ToggleAnswer Key Exercises on Hashing in a Database

Hashing is specific to the software used, the following corrected exercises explore the most used techniques.

Introduction to Hashing

The articles of a random file (we also speak of hashed file) are distributed in packages composed of one or more disk pages. A hash function h associates with a key value (discriminatory or not) an integer representing a packet number. All items whose key value is such that the h function associates the same package number are stored together in this package.

When searching on an access key, the h function uniquely determines the packet containing all the articles meeting the search criterion. This function h is rarely injective. Let c1 and c2 be two different keys, we call collision the fact that h(c1) = h(c2).

This collision phenomenon requires on the one hand to verify that each accessed article satisfies the search criterion and on the other hand makes it difficult to equi-distribute the articles in the different packages, complicating the management of the secondary memory. Additionally, the h function rarely preserves the order of keys (c1 h(c1)

The first section of this part is devoted to the study of static hashing organizations in which the number of hash packets is statically fixed at file creation. The unequal distribution of articles in the different packages can cause certain saturated packages to overflow, significantly degrading the performance of search operations and inserting articles into the file.

The second section of this theme presents the organizations by dynamic hashing avoiding this problem at the cost of greater complexity mechanisms implemented.

Static Hashing Exercise

A file organized by static hashing is decomposed into N fixed-size packets when it is created. The associated hash function determines for each access key value a packet number between 0 and N-1. The choice of the hash function is essential to ensure an equi-distribution of articles in the N packages.

This choice must be guided by the rarely uniform distribution of keys in the file. The number of collisions (c1 diff c2 and h(c1) = h(c2)) is greater as the number of hash packets is small compared to the number of articles to be inserted into the file. Collisions will cause certain hash packets to overflow and cause these packets to overflow.

Question 1

What are the criteria for choosing the size of a hash packet? Propose a format for storing articles in a hash packet.

Question 2

Propose different forms of hash functions producing random numbers between 0 and N-1 from an access key.

Question 3

We first assume that the saturated packets overflow into the packets not yet saturated. Suggest different techniques for storing and later finding overflow items. Are these techniques suitable in the case of numerous collisions?

Question 4

We now consider that the file includes an independent overflow zone whose size is fixed when the file is created. The initial N hash packets are called primary zone in order to distinguish them from the overflow zone.

Propose new overflow techniques exploiting this division into two independent zones. What is the contribution of these techniques compared to those introduced in the previous question? Do these techniques completely satisfactorily solve the collision problem? Conclude on the advantages and disadvantages of static organizations.

Question 1

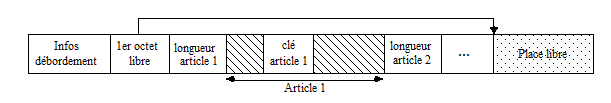

The size of a hash packet must be as large as possible in order to limit overflows in the event of a collision. On the other hand, a packet must be able to be read in a single I/O. The size of a hash packet is therefore limited by the size of a disk page (considered as the I/O unit). The overflow information depends on the strategy used (overflow indicator bit or number of the first overflow packet (see Question 3).

Question 2

Many hash functions are possible to determine a packet number between 0 and N-1 from a key value. The most common are:

– Integer division: This is the simplest and most frequently used function. The result of this function, commonly called module, is the remainder of the integer division of the key by the number of hash packets N. It is recommended to ensure that N is a prime number in order to limit the risk of collisions. For example, a file hashed into 11 packets, key item 35 will be placed in packet number 2 (2 = 35 modulo 11). If the access key is not numeric, the modulo function is applied to the binary representation of this key, considered as an integer.

– Extraction of the square: the key is first squared and then a set of bits (usually the low or medium bits) is extracted from the resulting number to form a packet number. Given that k bits allow 2k packets to be addressed, we will extract log_2 N bits to form a packet number between 0 and N-1.

– Generalized folding: if we consider that a packet number is expressed by p bits (with p = log2N), we divide the key into slices of p bits and we apply a binary “exclusive or” operation between all these slices in order to obtain a new string of p bits identifying a packet. The “exclusive or” operation is preferred to the “and” operation which favors the appearance of many 0s in the result bit string and to the “or” operation which favors the appearance of many 1s.

Question 3

If the packet designated by the hash function is saturated, we must store the insertion article in one of the remaining N-1 packets and be able to find it later. Several overflow techniques are possible:

– Open addressing: suppose that the saturated packet is packet i, this technique consists of sequentially searching for a free place for the article being inserted in the packets i+1, i+2, …, i+k, until finding a location of size sufficient. We store in packet i the number of the first packet in which it overflowed in order to speed up subsequent searches for overflowing items. The search for all articles belonging to package i is carried out as follows.

The articles stored in package i are first read sequentially by checking for each of them that it really belongs to package i (a key comparison makes it possible to eliminate articles from other packages possibly stored overflowing in the package i). The items in packet i stored in overflow are then searched sequentially in subsequent packets starting from the first overflow packet.

– Overflow chaining: this technique is identical to open addressing but all the articles belonging to the same packet are chained together here. This chaining avoids the cost of a sequential search to find all the articles of the same package stored in overflow. On the other hand, in addition to the additional storage cost linked to chaining, this technique can generate numerous I/Os if the chain of articles is long and if the chaining frequently passes from one package to another (we may then have to read the same packet several times). In the worst case, the chaining traversal may generate an I/O to retrieve each item (see Chapter 2, question 4).

– Address table of overflow items: This technique, very similar to the overflow chaining technique, stores the addresses of all overflow items in a table associated with each packet. The addresses stored in this table can be kept sorted in order to avoid multiple readings of the same packet. This technique, however, poses the problem of the space occupied and the static size of each of these tables.

– Re-hash: if packet i overflows, we store in the packet the fact that it has overflowed and we apply a new hash function to the articles to be inserted into this packet. This second function generates a packet number different from i and between 0 and N-1. The search for all articles belonging to package i is carried out as follows.

The articles stored in package i are first read sequentially, checking for each of them that it really belongs to package i (in order to eliminate articles from other packages possibly stored overflowing in package i). The articles of packet i stored in overflow are then searched in the packet identified by the second hash function applied to the access key.

These techniques offer a response to the problem of managing overflowing articles but none is really satisfactory if the collision rate is high. Performance degrades very quickly when searching for overflowing articles. This phenomenon is due to the fact that the overflowing articles of a package i are stored instead of the articles of a package j, quickly causing an overflow of the package j by a chain reaction.

Question 4

The overflow techniques presented in question 3 remain applicable, with a few modifications, to a file with an independent overflow zone. In the case of theopen addressing, the first free location allowing an overflow item to be stored is no longer sought in the following primary hash packets but sequentially in the overflow zone. The techniques of overflow chaining and of address table of overflowing articles remain unchanged but the chaining links correspond to addresses in the overflow zone.

Finally, the technique of re-hash requires splitting the overflow zone into P hash packets. Thus, when inserting an article into the file, we first apply an integer division by N to its key in order to determine a primary package number. If this primary packet is saturated, an integer division by P is applied to this same key in order to determine a secondary packet number. If this secondary packet is saturated in turn, then we use one of the techniques presented in question 3 in the overflow zone.

The advantage of separating the primary zone from the overflow zone is to avoid the chain reaction phenomenon due to the saturation of certain packets generated by the overflow of other packets (see question 3). Here, the overflow of a primary packet does not influence the occupancy rate of other primary packets. This management of overflows is therefore more robust in the face of a high rate of collisions. However, searching for items in overflow areas remains a costly operation.

Conclusion: the major advantage of static random organizations is their simplicity of implementation and the excellent performance they offer as long as packet overflows are few. Indeed, if no packet has overflowed, static random organizations guarantee the search for all items with a given key value in a single I/O. On the other hand, overflows quickly cause a collapse in performance regardless of the overflow management technique used. This problem requires periodic reorganization of files as soon as the overflow rate exceeds a given tolerance threshold.

Dynamic Hashing Exercise

The objective of dynamic hashing organizations is to minimize the cost of collisions by replacing overflow techniques with a dynamic increase in file size. Many dynamic hashing strategies have been proposed in the literature: extensible hashing [Fagin79], linear hashing [Litwin80] and trie-hashing [Litwin81], to name only the best known.

Each of these strategies constitutes an adaptation of a common principle: the progressive exploitation of the contents of the access key. Assume an access key whose hash produces a k-bit string. Hashing such a key makes it possible to address at most 2^k packets. The hashed file is initially created with N packets (N < 2^k) and the articles are distributed into these packets using only the first p bits resulting from the hash of their key (N=2^p and p < k).

In the event of saturation, a packet is split and the articles it contains are divided into two packets by exploiting the value of the (p + 1)th bit resulting from the hash of their key. Dynamic hashing methods differ depending on the strategy used to choose the packet to burst at each saturation.

Question 5

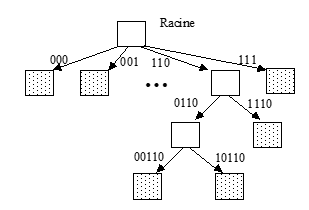

In the extensible hashing method [Fagin79], a packet is split into two as soon as it becomes saturated. We can represent the state of a file at a time t by a tree bursting whose nodes correspond to the packets and whose arcs materialize the successive burstings of packets. The root of this tree has 2p children representing the initial 2p packets.

Each node corresponding to a burst packet is in turn the father of two children representing the two packets resulting from the burst. The leaves of the tree therefore correspond to the packets actually occupied by the file at a time t while the internal nodes correspond to packets having existed at times t-1, ..., tn. Each arc of the bursting tree is valued by the signature of the package it references.

We call the signature of a packet the value of the bit string shared by the keys of all the items stored in this packet. Thus, the arcs starting from the root are valued by signatures of p bits having the respective value: 0…00, 0…01, up to 1…11. By induction, the arcs corresponding to level n of the tree are valued by signatures of p+n-1 bits.

We consider a hashed file with 8 initial packets numbered from 0 to 7. Packet number 6 has split into two child packets, the left child has split in turn. Represent the splitting tree of this file by specifying the valuations of the arcs and the packages occupied by the file.

Question 6

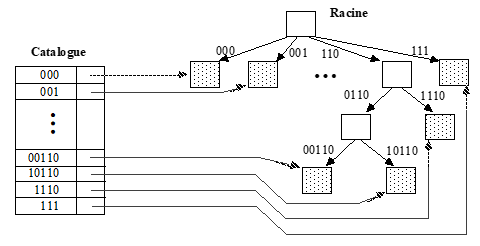

The burst tree must be stored (in some form) in a system data structure called catalog. The role of the catalog is to quickly determine the address of the package in which a new item must be inserted as well as the address of the package to be read during a key search.

Propose an organization of the catalog in the form of a table. Illustrate this organization of the catalog in relation to the previous explosion tree.

Assuming that the hash function used is modulo, indicate the search principle in the catalog allowing you to find all the articles in the file whose key value is 54, then all those whose key value is 65.

Question 7

When the file is very large, the catalog may no longer fit in memory. Propose an adaptation of the previous structure which always allows the address of a packet to be found (during an insertion or a search) in one I/O.

Indicate the principle of updating the catalog when packages are split. Give the different versions of the catalog, when the file is created and then after each of the two splits considered in question 5. How many entries reference package 000 and what is their respective signature?

How do we now find key 54 and key 65 articles?

Question 8

Does a file organized using the dynamic hash method have a size limit? If yes, specify which one, if not, specify why.

Question 9

The linear hashing method [Litwin80] is a dynamic hashing method avoiding the management of a catalog. Rather than bursting a packet upon saturation as in extensible hashing, packets are burst in a predefined order (hence the name linear). The file is composed of N initial packets, numbered from 0 to N–1. The items are placed in the initial packets by application of the hash function h0(key) = key modulo N. When a first packet overflows (for example: packet i), an empty packet of number N is dynamically added to the end of the file and packet 0 (and not packet i) is split into two by application of the function h1(key) = key modulo 2N.

If the distribution is uniform, half of the items in package 0 will be moved to package N and the other items will remain in package 0. The item that caused the overflow of package i is chained into overflow using a classic technique (e.g. : open addressing). When another packet j overflows, a new packet with number N+1 is added at the end of the file and packet 1 is exploded with the h1 function. By proceeding in this way linearly, packets i and j will sooner or later be split in turn. The h1 function can be used until the file has doubled in size (all packets from 0 to N-1 have then been split).

Each time the file size doubles, the bursts start again from packet 0 and the hash function hk-1 is replaced by the function hk(key) = key modulo (2^k N), where k represents the number of times the file has doubled in size (k is called file level).

At a time t, two hash functions are sufficient to manage linear hashing. What is the rule for determining which hash function to use when searching or inserting an item into the file? What system information must be maintained to enable the implementation of this rule?

Question 10

Give the algorithm corresponding to the rule stated in the previous question

Question 5

The explosion tree corresponding to the described file is shown in Figure 5.2. The grayed packets correspond to the packets occupied by the file at time t. It can be noted that the signature bit string is expanded from right to left. This makes it possible to prioritize the low-order bits (whose distribution is generally more random) of the hash keys.

Figure. Tree bursting valued by packet signatures

Question 6

The catalog is materialized by a table ensuring the correspondence between signature and package address. This table contains an entry for each packet occupied by the file at a time t, as illustrated in Figure 5.3.

Figure. Catalog corresponding to the burst tree of question 6

To search for all articles with a given key, simply find the entry in the catalog whose signature corresponds to a prefix of the bit string resulting from the hash of the key sought. For example, searching for articles with a key of 54 is done as follows. At most five bits are currently used in packet signatures, we apply the modulo 2 hash function5 at key 54. The result of the hash is therefore 22 (54 mod 32).

In binary, 22 is written 10110. We therefore look for the signature 10110 in the catalog in order to find the address of the corresponding packet. The same goes for finding key articles 65. 65 modulo 25 gives 1, or in 5-bit binary 00001. Entry 001 in the catalog will be selected because it is the only entry corresponding to a prefix of 00001.

Question 7

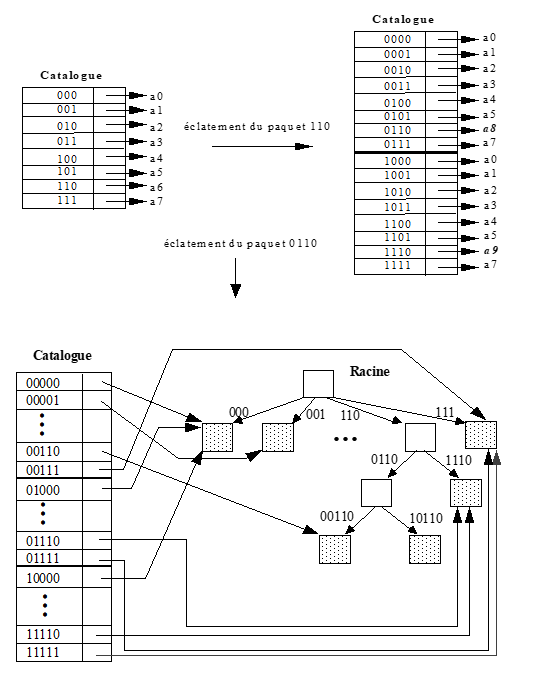

The structure proposed in question 7 requires a sequential reading of the catalog. To overcome this drawback, we modify the principle of updating the catalog. As long as there has not been a packet burst, the catalog contains p-bit signatures addressing the initial 2p packets. When one of these packets is first burst, the size of the catalog is doubled and an additional bit of each signature is expanded so that all entries in the catalog contain signatures of p+1 bits.

The two packets resulting from the split are then each referenced by a distinct signature of p+1 bits. A further splitting of one of these two packages will again result in a doubling of the catalog. On the other hand, the packets which have not burst are referenced by two signatures of p+1 bits whose first p bits are identical. If one of these packets bursts in turn, doubling the catalog is not useful since we already have two signatures of p+1 bits to address the two packets resulting from the bursting.

In this case, only the packet address associated with the two signatures must be updated. Figure 5.4 shows the evolution of the catalog after the bursting of package 110 then package 0110. In this figure, have represents the address of the packet i.

The advantage of this principle is twofold. On the one hand, since duplication of the catalog is rare, splitting a packet most often requires a simple update of the addresses associated with the signatures. On the other hand, all signatures have an identical number of developed bits. Thus, if the catalog is large and occupies several pages, it is possible to determine by calculation on which page the desired signature(s) are found. Only one I/O is therefore required to search the catalog, regardless of its size.

Figure. Stages of catalog evolution

The initial packet 000 is now referenced by four entries containing the same physical address but whose respective signatures are: 00000, 01000, 10000, 11000. We notice that these four signatures have the same profile xx000.

The search for key articles 54 is carried out as in question 7, namely that we select the signature catalog entry 10110. The search for key articles 65 amounts to selecting the signature entry 00001 which, in the present case, references the same packet as the signature entries: 01001, 10001 and 11001. Indeed, the initial packet 001 not having burst, it is referenced by all the entries whose signature profile is 001.

Note: The catalog entry containing the 10110 signature is entry 22 (10110 in binary). Therefore, it is not useful to store signatures in this type of catalog because this information is redundant with the number of each entry.

Question 8

When the signature of a packet reaches the total number of bits of the bit string resulting from the hash of the key, this packet can no longer be split since all the bits of the keys of the articles it contains have been used. In this case the file is full. It is however possible to chain an overflow packet to each saturated packet in order to allow their extension. In this case, the file no longer has a theoretical size limit.

Question 9

In order to determine the number of the package in which an article must be searched or inserted, it is necessary to know the level k of the file and to know whether the package in question has already been split or not, in order to determine whether we must use the hk or hk+1 hash function. To do this, we store in the file descriptor: the number of times the file has doubled in size (level k of the file) and the position of the current burst pointer referencing the next packet to burst.

By knowing the level k of the file, we apply the hash function hk to the key of the article to be searched for or inserted. If the packet number determined by this hash function is less than the current burst pointer, then the corresponding packet has already been burst and we re-hash the article key with the hk+1 function. Otherwise, the packet designated by the hk function can be directly exploited.

Question 10

The algorithm determining which hash function to use, when searching or inserting an item into the file, takes as input the file descriptor, the item key and outputs the packet number in which this article should be searched or inserted. The hash function used in this algorithm is h (k, key) = key modulo (2k N), where the parameter k corresponds to the file level.

function Calculation_packet_number (file_description: descriptor, key:key_type): integer;

var numpack, k: int;

beginning

k := file_description.k; // file level

packagenum := h (k, key);

if (package number < file_descrip.ptflare) so Calculation_packet# := h (k+1, key);

otherwise Calculation_package# := package#;

end

The algorithm for inserting a new article into a file organized using the linear hash method is shown below. The Calculation_packet# function used in this algorithm is the one presented in question 10. The Write(article, package#) function writes the article “article” in the package#packet or the overflow string if this packet is saturated.

procedure Insert(file_description: descriptor, key: key_type, article: art_type)

var

numpack, nvnumpacket: int;

k: int; // file level

ptburst: int; // current burst pointer

beginning

k := file_description.k;

ptflare := descrip_file.ptflare; package_num := Calculation_package_number (file_description, key);

if (numpacket is full) so

// burst the ptburst number packet into the

// packages ptespace and (ptespace + 2k N)

for each articlei Î ptsparkle to do

nvnumpacket := h (k+1, itemi.key);

Write(article, nvnumpacket);

ffor

if (numpacket = ptshard) so

// determine if the new article should be inserted

// in the ptespace package or (ptespace + 2k N)

packagenum := h (k+1, item.key);

fsi

// increment the current burst pointer

if (ptburst = (2k N) – 1)

so // file level update

k := k + 1;

ptburst := 0;

otherwise ptshard := ptshard + 1;

// file descriptor updated

descrip_file.k := k;

descrip_file.ptshard := ptshard;

fsi

fsi

// write the article in the numpaquet package

Write (article, numpack);

END

Multi-criteria hashing exercise

In the field of databases, article searches are generally carried out on multiple criteria (example: search for cars with power greater than 10 hp and Citroën brand). The file organizations studied in Chapters 2 and 3 favor the processing of the most frequently used search criterion on a file, by ordering the articles on disk according to this criterion. To efficiently process multi-criteria searches, it is possible to adapt the majority of file organizations in order to involve several attributes in the placement policy.

A second solution consists of using an additional data structure, called secondary index. A secondary index constitutes a logical reordering of the articles of a file according to an access key (discriminatory or not) different from the placing key on which the organization of the file is based. When searching using an access key, scanning the secondary index provides the identifiers of all articles satisfying the search criterion.

These articles are then retrieved one by one via their identifiers, at the rate of one I/O per article. Creating a secondary index introduces a significant storage cost. In addition, any update of the data file causes all secondary indexes relating to this file to be updated. The use of secondary indexes must therefore be restricted to frequent search criteria.

Question 1

In the static hashing method studied previously, a packet number is determined by the value of a bit string resulting from the hashing of the value of a single attribute. To extend this method to multi-attribute hashing, the bit string is obtained by respectively concatenating b1, b2, … bn bits resulting from the hashing of the attributes A1, A2, …, An by the hash functions h1, h2, …, hn .

a) Give the principle of searching for all the articles in the file satisfying the condition Ai = .

b) Calculate the number of I/Os needed (number of packets accessed) to perform the previous search assuming no overflow.

c) A search with the condition (Ai = and Aj= ) generates more or less I/O than the previous search? Same question with the condition (Ai = or Aj= ).

Question 2

Many multi-attribute dynamic hashing methods have been proposed, including: grid-file [Nivergelt81], multi-attribute linear hashing [Ouksel83] and predicate trees [Gardarin84]. Identical to multi-attribute static hashing, the bit string used to determine a packet number is composed of the bits resulting from the hashing of the attributes A1, A2, …, An by the hash functions h1, h2, …, hn.

However, multi-attribute dynamic hashing methods differ depending on the order of the bits coming from each hash function hi(Ai) in this bit string (for example, the bits associated with an attribute Ai are not necessarily contiguous in the chain). As in single-attribute dynamic hashing, this bit string is expanded bit by bit as packets are burst.

Give the principle of searching for articles satisfying the condition Ai = .

Question 3

Assume a file dynamically hashed on attributes A1, A2 and A3. In what order should the bits resulting from the hash functions h1(A1), h2(A2) and h3(A3) be arranged in the bit string determining a packet number in order to favor access to the A2 attribute, then to attribute A3 then finally attribute A1 in this order of priority?

Question 4

The grid-file method allows each of the placement attributes to be treated equitably. Let's assume a file hashed on attributes A1, A2 and A3. In what order should the bits from the hash functions h1(A1), h2(A2) and h3(A3) be arranged in the bit string determining a packet number in order to ensure this fairness?

Question 1

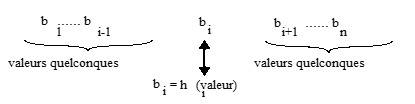

a) The N packets of a file hashed using a static multi-attribute method are identified by strings of p bits (where p=log_2 N) constructed by concatenation of b1, b2, …, bn bits (where b1+b2+ … +bn = p) associated respectively with the attributes A1, A2, …, An. The search for all the articles in the file satisfying the condition Ai = requires the reading of all the packets whose numbers are identified by a string of p bits in which the values of the bi bits associated with Ai correspond to the values of the bi bits resulting from the hash of the value sought by the hi function. O

n therefore selects all the packets whose numbers have the form shown in figure 6.1. These packets are then scanned sequentially to retrieve all items satisfying the search criterion.

Figure. Signing selected packages

b) If there was no overflow, the number of I/Os generated by such a search corresponds to the number of packets whose numbers have the form cited above. Since the file contains 2ppackets (2p= number of possible combinations of p bits), the number of selected packets (number of I/Os) is equal to 2^(p-bi), i.e. the number of possible combinations of p-bi bits since bi bits are fixed by the search criterion.

c) Following the same reasoning, the number of I/Os generated by a search with the condition (Ai = and Aj= ) is equal to 2^(p-bi-bj). This cost is therefore lower than the cost calculated in b). The number of I/Os generated by a search with the condition (Ai = or Aj= ) is equal to 2^(p-bi) + 2^(p-bj).

Question 2

Identical to multi-attribute static hashing, searching for all articles in the file satisfying the condition Ai = requires the reading of all packets whose numbers are identified by a string of bits in which the values of the bi bits associated with Ai correspond to the values of the bi bits resulting from the hash of the value sought by the hi function. The difference is that the packets are created dynamically here and the packet signatures are developed as they are burst.

We therefore start by constructing a string of bits, called a signature profile, in which only the values of the bi bits associated with Ai are entered. This signature profile is then used to filter the catalog in order to select all the signatures whose values of the bi bits of Ai correspond to those of the signature profile, independently of the values of the other bits of the signature. If certain bits of the signature profile correspond to bits not yet developed in a signature, the comparison is applied only to the developed bits.

Hashing the desired value 12 by hi gives 3, or 11 in binary. The catalog filtering will therefore be carried out with the profile ??1?1 (where ? symbolizes an unknown bit value). This filtering will make it possible to select the 2^(5-2) catalog entries containing the signatures 00101, 00111, 10101, 10111, 01101, 01111, 11101 and 11111. The resulting number of I/Os is simply 2 because the set of these signatures reference two initial packets 101 and 111 which never burst.

Question 3

In a static placement method, the ordering of bits in a packet's signature does not matter. On the other hand, in a dynamic placement method, the bursting of packets is done by exploiting the signature bits one after the other. Only the used bits of the signature participate in the placement, the other bits remaining insignificant during searches. Favoring access to an attribute therefore amounts to placing the bits associated with it at the start of the signature (i.e. on the right) in such a way that they become significant from the first bursts.

Consider the following hash functions h1(A1), h2(A2), h3(A3):

h1(A1) = i^1 i^2 i^3 … i^n where i^q corresponds to the qth bit of h1(A1)

h^2(A2) = j^1 j^2 j^3 … j^n where j^q corresponds to the qth bit of h2(A2)

h3(A3) = k^1 k^2 k^3 … k^n where k^q corresponds to the qth bit of h3(A3)

Favoring access to attribute A2, then to attribute A3 then finally to attribute A1 in this order of priority amounts to placing the bits from the hash functions h1(A1), h2(A2) and h3(A3 ) in the following order: i^1 i^2 i^3 … in k^1 k^2 k^3 … k^nj^1 j^2 j^3 … j^n. In this example, the placement on attribute A1 will only become effective when the file has reached a large size, after a significant number of packet bursts.

Question 4

In order to treat each placement attribute fairly, the grid–file method mixes the bits from each hash function in the bit string in order to exploit a bit of a different attribute each time a packet is burst. .

In the example of the file hashed on the attributes A1, A2 and A3, the grid–file method will exploit a bit of h1(A1) during the first bursting of a packet, then a bit of h2(A2) on the second bursting of this packet, then a bit of h3(A3) at the third bursting then again a bit of h1(A1) on the fourth bursting and so on, rotating cyclically on each of the attributes.

This fairness is therefore obtained by placing the bits from the hash functions h1(A1), h2(A2) and h3(A3) in the following order: k^1 j^1 i^1 k^2 j^2 i ^2 … k^nj^ni^n, where i^q, j^q, k^q have the same meaning as in question 5.