Contents

ToggleCorrected exercises on time complexity

The following corrected exercises relate to the analysis of algorithms, in particular accuracy, exhaustiveness and the calculation of time complexity.

Exercise 1

Determine the time complexity of the Check algorithm:

Before calculating the complexity, it must be checked whether the algorithm terminates. The elements of the array are integers, so can exceed the value of 9, which means that tab[value-1] falls out of the size of the array. The algorithm is therefore not viable and useless to continue the analysis further.

Exercise 2

Analyze the complexity of the algorithm. Write another algorithm which does exactly the same as Algorithm but with strictly better asymptotic time complexity.

The algorithm is really complicated to understand. The easiest way is to test its operation with a small vector such as <3, 5, 4, 4, 5, 7>. I let you test step by step on paper.

In the loop, when two boxes are identical, we increment the value of c. Given the sequential content in the loop, i remains stable while j evolves. So we count the number of occurrences in the table of the value of box i. The second branch gives a condition when j reaches sort of the size of the vector. In this case, it is verified that the number of occurrences of the value of box i exceeds the last count recorded in m. Then we increment i and place j on the same box.

This does not change the operation of finding the number of occurrences of each box because if the value was before, it has already been counted in its entirety. Precisely, starting i and j at the same place produces less calculation.

If we resume the operation, i and j are on the same box. j increments to the end of the array. Then i move forward one space and j move to the same place. The number of iterations is therefore the sum of n (size of the array) to 1, ie n(n-1)/2. Both branches have the same complexity in 0(1), so the complexity of the algorithm is O(n²).

To make it simpler, it suffices to carry out the algorithm in two stages. First, we sort the table (possible in O(n log(n)). Then we read the table by two boxes tab[i] and tab[i+1]. As long as the values are equal, we increment a counter c. If it is different, we look if c>m as the proposed algorithm. The complexity is therefore O(n log(n) + n), or O(n log(n)).

Exercise 3

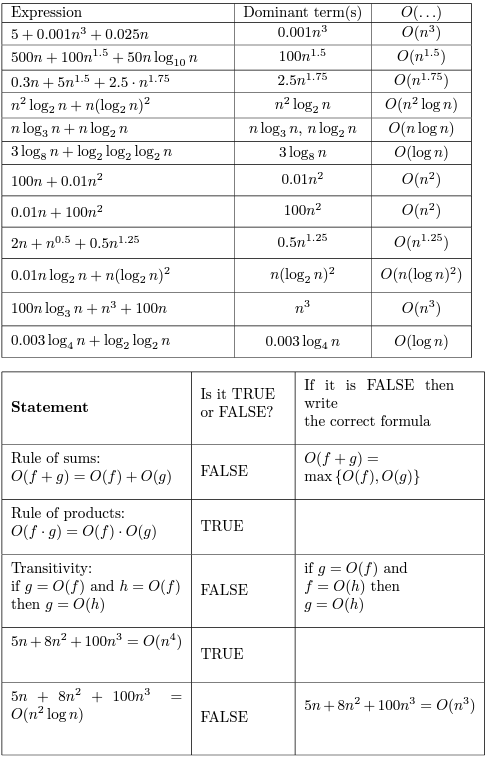

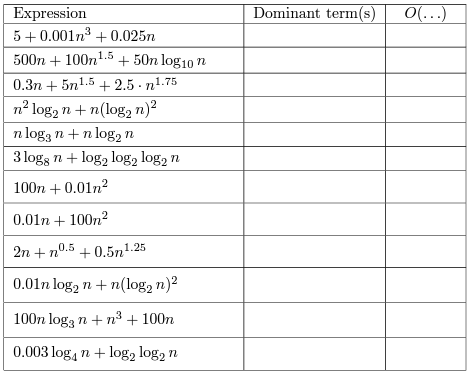

Complete the two tables below:

Exercise 4

What is the (asymptotic) running time of each of the following algorithms, as a function of n? Justify your answers.

To)

for i = 1 to n do

for j = 1 to 2n + 1 do

print (“Hello World”)

end for

end for

b)

for i = 1 to 10 do

for j = 1 to n do

print (“Hello World”)

end for

end for

vs)

for i = 1 to n do

for j = i to n do

print (“Hello World”)

end for

end for

d)

for i = 1 to n do

for j = 1 to 2 ∗ i + 1 do

print (“Hello World”)

end for

end for

e)

for i = 1 to n ∗ n do

for j = 1 to i do

print (“Hello World”)

end for

end for

f)

for i = 0 to m do

t ← 1

while (t <m) do

print (“Hello world”)

t ← t ∗ 2

end while

end for

a) O(n²). Here the first loop does (n-1) iterations and the second loop 2n iterations, so a total of (n-1)*2n.

Well). Here the first loop makes 10 iterations and the second loop n iterations, so a total of 10n. Attention, according to certain calculation that would make 9 iterations followed by n-1 iterations. Fortunately, in the Landau notation it doesn't change anything, so there's no need to quibble about the iteration count!

c) O(n²). Very classic, each loop makes n-1 iterations.

d) O(n²). The first loop is classic. The second goes from 1 to 2i+1, so if we do a few examples: 3, 5, 7, …, 2n+1 (here we run the two loops at the same time). We therefore have the sum of the first n odd terms, therefore quadratic.

It is also possible to calculate the complexity by multiplying the complexity of each of the loops. The first loop tends to n iterations when n is large; the second loop tends to n iterations when n is large. So we have a complexity of O(n*n)=O(n²).

Be careful to check the number of iterations when n is large, it will not always be in O(n)!

e) O(n^4). The reflection is the same as for algorithm d. Here we have the first n terms squared.

f) O(m log(m)). For the first loop everything is fine, the problem will come from the second. Here the value of t doubles at each iteration and the loop stops when t exceeds the value of m. Let k be the number of iterations performed. We stop when 2^k >m so when k > log(m). Hence the complexity.

Exercise 5

Let TTO(n), TB(n) and TVS(n) the execution times of algorithms A, B and C, respectively.

Instruction A

if (n <100) then

Instruction B

else

for (j = 1 to n) do

Instruction C

end for

end if

What is the running time of this algorithm, under each of the following sets of assumptions? Justify your answers.

- TTO(n) = O (n), TB(n) = O (n2) and TVS(n) = O (log n).

- TTO(n) = O (n2), TB(n) = O (n2) and TVS(n) = O (log n).

- TTO(n) = O (n2), TB(n) = O (n3) and TVS(n) = O (log n).

Since large values of n determine the asymptotic running time, we can neglect the if part of the if statement. Therefore, in all cases, the running time of this algorithm is O(TA(n) + nTC(n)).

- O (n log n)

- O(n²)

- O(n²)

If you have any doubts, you can also use the classic formula in the case of branching 0(max(T_branches)). In this case, we would have had O(TA(n) + max(TC(n), nTC(n)) ).

Exercise 6

What is the worst-case complexity of each of the following code fragments?

Two loops in a row:

- for (i = 0; i <N; i ++) {

- sequence of statements}

- for (j = 0; j <M; j ++) {

- sequence of statements}

How would the complexity change if the second loop went to N instead of M?

A nested loop followed by a non-nested loop:

- for (i = 0; i <N; i ++) {

- for (j = 0; j <N; j ++) {

- sequence of statements

- }}

- for (k = 0; k <N; k ++) {

- sequence of statements}

A nested loop in which the number of executions of the inner loop depends on the value of the index of the outer loop:

- for (i = 0; i <N; i ++) {

- for (j = N; j > i; j–) {

- sequence of statements

- }}

- The first loop is O(N) and the second loop is O(M). Since you don't know which is bigger, you say it's O(N+M). It can also be written O(max(N,M)). In the case where the second loop goes to N instead of M the complexity is O(N). You can see this from either of the expressions above. O(N+M) becomes O(2N) and when you remove the constant it's O(N). O(max(N,M)) becomes O(max(N,N)) which is O(N).

- The first set of nested loops is O(N²) and the second loop is O(N). It is O(N²+N) which is O(N²).

- This is very similar to our previous example of a nested loop where the number of iterations of the inner loop depends on the index value of the outer loop. The only difference is that in this example, the inner loop index counts down from N to i+1. It is always the case that the inner loop executes N times, then N-1, then N-2, etc., so the total number of times the innermost "statement sequence" executes is O(N²).

Exercise 7

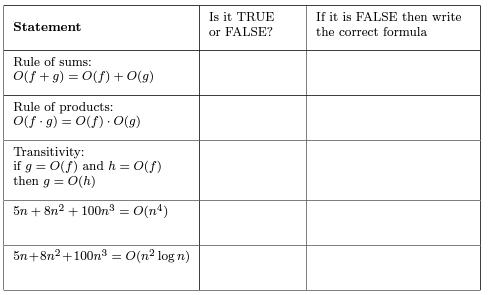

Give a running time analysis (Big-Oh notation) for each of the following 4 program fragments. Note that the execution time here corresponds to the number of times the sum++ operation is executed. sqrt is the function that returns the square root of a given number.

- If it takes 10ms to execute program (b) for n=100, how long will it take to execute n=400?

- If it takes 10 ms to run program (a) for n=100, what size problem can be solved in 40 ms?

A is in O(sqrt(n)) it is a sequential sequence of loops, we have 0(sqrt(n)/2 + sqrt(n)/4 + sqrt(n)/4 +8)

B does not end, the second loop is not well written! Considering the loop written properly, we would have had three interdependent loops bounded at 0(sqrt(n)), 0(1) and O(1) — from i to i+8; from d to d+8 that makes only 8 iterations. Which is 0(sqrt(n)).

c is in O(n^5). Three nested loops of respective complexity 0(n), 0(n²), 0(n²) therefore 0(n*n²*n²).

d has a branching condition which is not valid because it does not return a boolean, the algorithm does not terminate so no need to calculate the complexity.

With cross product: sqrt(100)=x and y=10 ms; sqrt(400)=2x takes 20ms

In 40ms, n=1600.