Contents

ToggleHuffman coding

Huffman coding is a widely used method in data compression. It is used to encode a text in binary, using for each letter a number of bits depending on the number of times the letter is present: the more the letter appears, the smaller the number of bits. Thus, the total number of bits used to encode the text is reduced compared to standard ASCII encoding which uses eight bits for each letter.

When it comes to transmitting information on a non-noisy channel, the priority objective is to minimize the size of the representation of the information: this is the problem of data compression. Huffman's code (1952) is an optimal variable length code, that is to say such that the average length of a coded text is minimal. Size reductions of the order of 20 to 90% are thus observed.

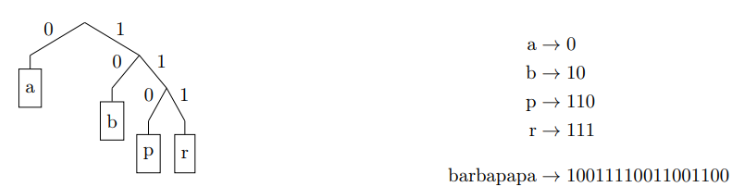

The binary code associated with each letter is defined by a binary tree. The leaves of the tree correspond to the letters of the alphabet. Internal nodes contain no information. The path taken to reach a leaf from the root defines the code of the letter on this leaf: left 0, right 1. For example:

Huffman tree

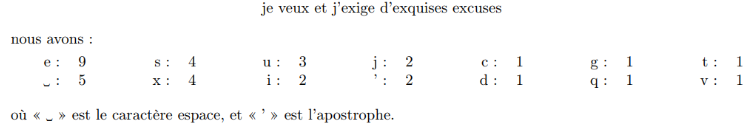

When the text to be encoded is known, it is possible to optimize the binary tree used to shorten the codes of the most frequent letters. This is what Huffman's algorithm does: it begins by counting the number of occurrences of each character in the text. For example, in the sentence:

From these frequencies, the algorithm initializes a tree per character, with the number of appearances of the character as weight:

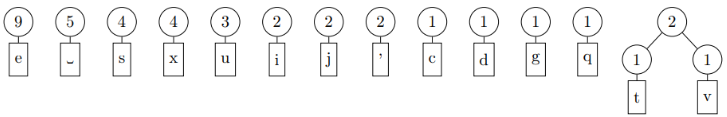

At each iteration, the algorithm selects the two trees with the lowest weights, and assembles them to form the two children of a binary tree whose weight is the sum of the two trees. When several trees are tied for the lowest weight, the tree selected among them can be any one. Thus, at the first iteration, we could obtain:

and after four more iterations:

When there is only one tree left, the algorithm is finished.

Here is an example of building the Huffman tree to better understand the process. In this example we have placed the letters in ascending order unlike the previous example:

Huffman's algorithm

More formally, Huffman's algorithm is as follows:

Coding and decoding

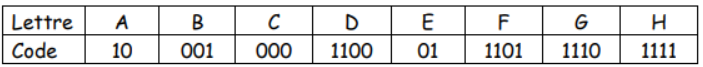

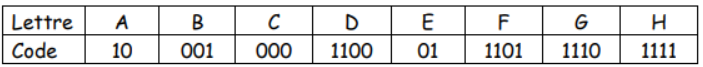

We can rewrite the tree in the form of an array. The coding of a letter is linked to the path traveled in the tree until the desired letter. Take the following table:

This type of code is said to be prefix, which means that no code is the prefix of another (the code of A is 10 and no other code starts with 10, the code of B is 001 and no other code does not start with 001). This property allows characters to be separated unambiguously.

Attention, here everything leads to believe that the proposed table is not optimal. Indeed, there are 8 characters, so using binary, we could use 3 bits to encode all the characters (2^3 = 8 possibilities).

Here, the sum of the encoded letter bits is 2+3+3+4+2+4+4+4 = 26 bits, or an average of 26/8 = 3.25 bits per character. But in fact what will count is not the average, but the frequency-weighted average as we will see in the example exercise.

Using the array, it is possible to encode a message (here the + is a concatenation):

CACHE=000+10+000+1111+01=00010000111101.

We therefore have a 14-bit message instead of 5*3=15 bits, which corresponds to a memory reduction for text storage of (15-14)/15 = 6.6%. Although on average the encoding is larger, in fact we have a reduction in the memory space used!

Example

1) We seek to encode each letter of this alphabet by a sequence of binary digits.

a) How many bits are needed to encode each of the 8 letters of the alphabet.

b) What is the length in bytes of a 1000-character message built on this alphabet?

2) Provide a fixed size code for each character of the 8-letter alphabet.

3) We now consider the following coding, the length of the code of each character being variable.

a) Using the previous table, give the message code: CACHE.

b) What is the message corresponding to the code 001101100111001.

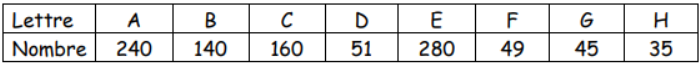

4) In a text, each of the 8 characters has a different number of occurrences. This is summarized in the following table, constructed from 1,000 character text.

a) Using the fixed size code proposed in question 2., what is the length in bits of the message containing the 1000 characters listed in the preceding table?

b) Using the code from question 3., what is the length of the same message in bits.

5) Realize the Huffman tree optimizing the memory space. Redo questions 3 and 4 with the new tree.

Correction of the example

1a – To encode the 8 letters, 3 bits are needed because 23 = 8.

1b – 1000 characters correspond to 1000*3 = 3000 bits. We divide by 8 to have the number of bytes = 375.

2 – Since with 3 bits there are 8 possibilities, we will assign each possibility to a character such as:

3a – We saw it in the text CACHE is coded by 00010000111101.

3b – We read the bits from left to right, as soon as the reading gives a letter we write it and we start looking again in the table for the corresponding character.

0 gives nothing, 00 gives nothing, 001 gives a B. 1 gives nothing, 10 gives an A, and so on. The final word is BADGE.

4a – The length would be 1000*3 = 3000 bits.

4b – To know the length with the coding table, we will multiply for each character its frequency by its encoding length: 240*2 + 140*3 + 160*3 + 51*4 + 280*2 + 49*4 + 45*4 + 35*4. That is 2660 bits with a reduction of (3000-2660)/3000 = 11% (which is really not terrible…).

5 – After performing the Huffman method, you get the following tree:

In the end, it was the table that was already provided before, so it was already optimal!