Contents

ToggleCorrected exercises: Markov chains in discrete time

This page includes many corrected exercises on Markov chains in discrete time and asymptotic behavior, class, chain ergodic, uptake.

Exercise 1

Build the markov chain corresponding to the following stochastic matrix:

0.4 | 0.6 | 0 |

0.2 | 0.5 | 0.3 |

0 | 0.4 | 0.6 |

How much class does the chain have? Is it reducible? If so, reduce it. Calculate the stationary probability, if it exists, of the irreducible chain. Calculate the periodicity of the classes.

To represent the chain, we choose to number the states from 1 to 3, in the order of the rows of the transition matrix:

To check the classes in the chain, we need to check the communicating states. Here, 1 communicates with 2 and 2 with 3 (therefore accessible in both directions), by transitivity 1 communicates with 3. The chain has only one class, it is irreducible. The states are therefore all recurrent, and are aperiodic because they all have a loop on themselves. Under these conditions, the chain admits a unique stationary probability. It suffices to solve the following system of equations:

This gives the vector (4/25, 12/25, 9/25).

Exercise 2

As for Exercise 1 with the following stochastic matrix:

0 | 0.5 | 0.5 | 0 |

0 | 1 | 0 | 0 |

0 | 0 | 0 | 1 |

0 | 0 | 1 | 0 |

We note that 2 does not communicate with anyone, it is an absorbing state. Nobody communicates with 1, the state forms a transitional class. States 3 and 4 communicate with each other, they form a recurrent class. So there are three classes {1}, {2}, {3,4}.

There is no guarantee of stationary probability. Let's solve the following system:

The system does not allow a single solution. Let Pi2 be between 0 and 1, then we find a solution:

We notice in the class {3,4} that the probabilities are equal on a frequency of 2k, we deduce that the class is periodic over a period of 2. The class {2} is absorbing, there is no period. The class {1} is transient, which is why there is no longer a population after a time k.

Exercise 3

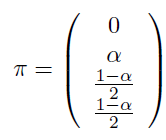

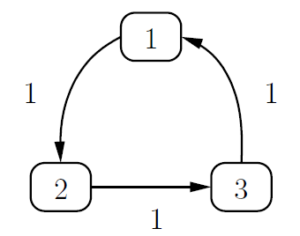

As for Exercise 1 with the following stochastic matrix:

0 | 1 | 0 |

0 | 0 | 1 |

1 | 0 | 0 |

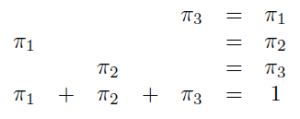

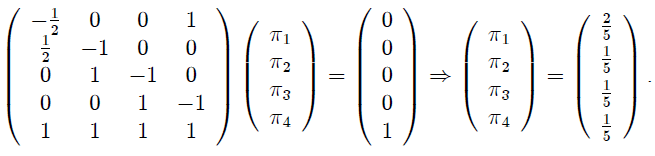

Now let's move on to the stationary probability:

Which gives the vector (1/3, 1/3, 1/3). Regarding the periodicity, we notice that the transition matrix becomes the identity matrix when it is cubed. We can therefore deduce that the Markov chain is periodic with periodicity 3. The stationary probability therefore does not exist, on the other hand we can conclude that over a large random step, we spend 1/3 of the time in each of the states.

Exercise 4

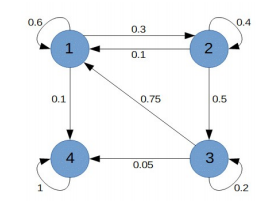

From the following three transition graphs, reconstruct the associated Markov chains (state space and matrix). Then do the analysis of these Markov chains: the classes and characteristics, stationary law, absorption probability, mean absorption times).

FIRST CHART :

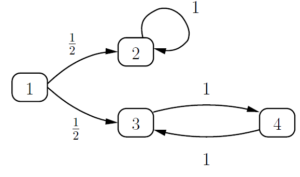

All the states communicate, so there is only one necessarily recurring class. Note that the power matrix 4 gives the identity matrix, the periodicity is therefore 4.

Now let's calculate the stationary distribution:

Since there is periodicity, we know that there is no convergence. In addition, a random step will spend ¼ of its time in each state. Regarding absorption, we have an irreducible chain, so the probability of being in the unique class is 1.

SECOND GRAPH:

All the states communicate, the states are all of the same period. The matrix seems similar to the first graph, but note that state 1 has a loop, therefore of period 1. The unique class is therefore aperiodic (of period 1).

As the chain is aperiodic and irreducible, we know that it converges towards the stationary distribution.

Since there is only one class, the calculation of absorption probabilities is simple, from any state, we always go in the same class.

THIRD GRAPH:

We have four classes

{1} which is transitory therefore no periodicity.

[2} which is recurrent with a loop therefore a periodicity of 1.

{3} same as {1}

{4} same as {2}

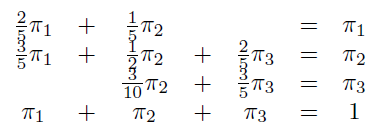

Let us calculate the stationary distribution. Given that there are transient classes, we know that there will be no convergence:

Note that there is an infinite number of solutions.

Let us calculate the absorption probabilities. Let’s start with state 2. Let’s calculate the vector v such that vi is equal to the probability that the chain is absorbed in 2 knowing that it starts at i. The calculations are simple since we have two transient classes of expectation 1 (loop-free and single-state).

We therefore know that state 2 is reached with a step of 1 maximum, this considerably reduces the number of calculations. Indeed, the probability of reaching 2 starting from 1 is known since it is p12. The transition probabilities are therefore the second column of P. Similarly the absorption probabilities of state 4 is the fourth column of P. In the general case, it is necessary to calculate the vector v such that (PI) v = 0 .

We can calculate the average absorption time. States 2 and 4 are recurrent therefore x2= x4= 0. It is quick to see that x1= x3=1+0=1.

Exercise 5

Consider the following example:

Calculate the probability of the following trajectories (h, a, f, h), (h, a, f, a), (a, a, a).

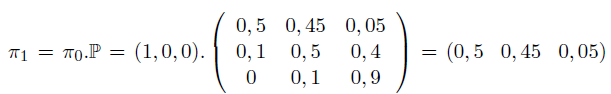

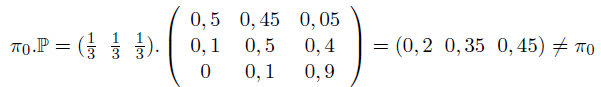

Calculate the distribution of states X1 at time t = 1 if we assume X0 = (1, 0, 0).

To interpret.

Show that a uniform distribution X0 = (1/3, 1/3, 1/3) is not a stationary distribution for this Markov chain.

Is there a stationary distribution for this Markov chain?

P (h, a, f, h) = X0 (h) * 0.45 * 0.4 * 0 = 0

P (h, a, f, a) = 0.018 * X0 (h)

P (a, a, a) = 0.25 * X0 (a)

So there is, after three iterations, half of the plot covered with grass, .45 shrubs and .05 with forest assuming that at first there is only grass.

The uniform distribution is not stationary. To calculate the stationary distribution it is necessary to solve the system XP = X:

Which gives the solution (2/53, 10/53, 41/53). We can conclude that we tend towards a majority of forest, with a density of 41/53.

Exercise 6

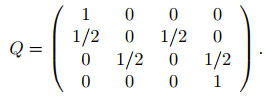

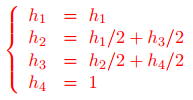

We consider a Markov chain of four states according to the following transition matrix:

Determine the classes of the chain then the probability of absorption of state 4 starting from 2.

Determine the absorption time in 1 or 4 from 2.

We notice that state 1 and state 4 are both absorbing states, forming two classes. States 2 and 3 communicate but can go in an absorbing class. We deduce that {2,3} forms a transient class.

According to the course, the absorption probability of an absorbing state k from a state i is solved by the following system:

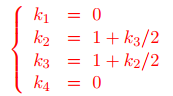

This gives the following system:

And so the solution vector (0; 1/3; 2/3; 1), h1= 0 so as not to influence the other equations. We deduce by h2 the probability of absorption of state 4 starting from 2.

According to the course, the absorption time by an absorbing state k from a state i is solved by the following system:

This gives the following system:

And so the solution vector is (0; 2; 2; 0). The absorption time K2 give the correct answer.

Exercise 7

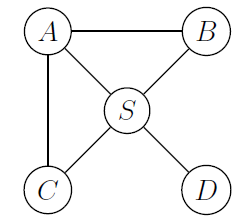

We consider a road network made up of 5 cities A, B, C, D, S as follows:

To model the road traffic, we consider that the cars take a road leaving the city in an equitable (uniform) way. Denote by Xnot the random variable representing the city reached by a car at time n.

- Justify that the probabilistic process is indeed stochastic and forms a Markov chain. And give the transition matrix.

- What is the probability that, starting from B, the car will be in C after two iterations.

- Classify states into classes and calculate their periods.

- Write the system of equations verified by stationary probabilities.

- We denote the number of routes resulting from i. Deduce a distribution of an initial population as a function of. Calculate the stationary distribution. Is she unique?

- A car is observed for a time N which is assumed to be very large. Approximately how long has she spent in a given state?

- Starting from S, what is the average number of steps it takes for the car to come back to S?

- What is the probability that, starting from S, the car visits city D before city C?

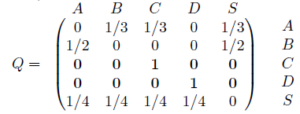

- Even knowing its entire past trajectory, the car's destination is only decided from its current position. In addition, according to the definition of the exercise, the car has a probability of 1 of leaving its current city, and therefore of taking a step towards connected cities. The choice of step is independent of the past, we are indeed in the presence of a Markov chain. The transition matrix is as follows:

- There are only two paths that go from B to C in two steps: BAC and BSC. The probability of going from B to C in two steps is therefore equal to ½ * 1/3 +1/2 * ¼ = 7/24.

- As the graph shows, all the states are linked to each other, they all communicate and therefore only form a single recurring class (therefore positive). The chain is irreducible. Regarding the periodicity, note that there are chains of all lengths from A to A. The chain is therefore aperiodic.

- See the course.

- Note that the distribution vector calculated by the number of exits from each city corresponds to the vector of stationary probabilities (1/4, 1/6, 1/6, 1/12, 1/3). This probability is unique since the chain is ergodic.

- As the chain is ergodic, we know that its asymptotic behavior is equivalent to the stationary probabilities. For example, the car will spend 1/12 of its time in state D.

- Once again, the chain is ergodic, we know that the expectation is the inverse of the stationary probability. The average time to return to S is therefore 3 steps.

- This point is much more complex to deduce. The idea is to make the Markov chain absorbent in C or D. The probability, starting from S, of reaching D is therefore the probability of being absorbed by the class {D}. First, we modify states C and D as follows:

We then have two recurrent (and absorbing) classes and a transition class containing {A, B, S}. It only remains to calculate the absorption probabilities and conclude (see the course).

Exercise 8

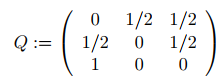

We consider a Markov chain with three states with the following transition matrix:

What are the recurrent, transient, steady state states? Calculate the time to reach each state. Calculate the period of each state. Deduce on the stationary distribution.

From state 1 we can go to state 2 and 3, from state 2 we can go to state 1 and 3, from state 3 we can go to 1 and by transitivity from 1 to 2 we deduce that 3 can go to 2. All the states communicate therefore the chain is irreducible, all the states are positive recurrent.

The chain therefore admits a unique stationary law of value (4/9; 2/9; 3/9)

The reach time in a state is the inverse of the values of the stationary law.

When we calculate Q² and Q3, we notice that the value in index (1,1) is positive, so there is no period. It is not useful to check the whole diagonal because we have shown that all the states are part of the same class.

The chain is ergodic, its asymptotic character tends towards the stationary distribution.

Exercise 9

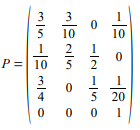

To represent the passage of a phosphorus molecule in an ecosystem, we will consider four possible states:

- the molecule is in the soil,

- the molecule is in the grass,

- the molecule was taken up by cattle,

- the molecule has left the ecosystem.

The transition matrix is as follows:

Represent the associated Markov chain. Study the Markov chain.

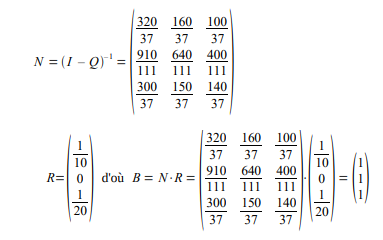

Pi = (0, 0, 0, 1) because of the absorbent state. It is possible to calculate N and B by: